SEO Technical Site Audit [Template Included]

Last updated 12/29/2022

Jump links

- About this audit

- Google Search Console

- Google Index Coverage

- Robots.txt

- Meta robots tag

- X-robots-tag

- Error Responses

- Redirects

- Duplicate Content

- Canonical tags

- Site Security

- Mobile Best Practices

- XML Sitemap

- Title Tag

- Meta Description

- Page Not Found

- Text In Images

- Auditing Javascript

- Internal Linking

- Website Speed Best Practices

- Image Best Practices

- Structured Data Best Practices

- Heading Tags

- URL Best Practices

- Favicons

About this audit

Why is a technical site audit important?

If you implement technical SEO incorrectly, you may completely miss out on organic traffic, regardless of how much optimization you’ve performed on other aspects of your SEO strategy.

Are there any tools required for this audit?

Yes, this audit leverages the following tools:

- Google Search Console

- Screaming Frog

- Chrome browser

- Certain Chrome extensions mentioned below

- Excel or Google Sheets

How often should you conduct this audit?

It depends on the website but here are some rules of thumb:

- Before and after any major website update.

- At least once a year. Quarterly is best.

Audit Checks

Is Google Search Console Configured?

Overview:

Google Search Console is a free online dashboard showing you how your site is doing in Google search. It allows you to track performance through metrics like impressions and clicks, identify issues like a page not showing in search results, and take actions like requesting that Google crawl your XML sitemap.

Importance:

- Track your performance on Google

- Tools to troubleshoot why a page on your site may not be performing well

- Tools to help you inform Google when something on your site has changed

Checks to make:

See instructions below for how to conduct each check.

- Is Google Search Console configured?

Instructions for the checks above:

Check 1: To check whether you have Google Search Console enabled, follow the steps below:

Visit this URL. If you are directed to a welcome screen like the one below, click the “Start Now” button.

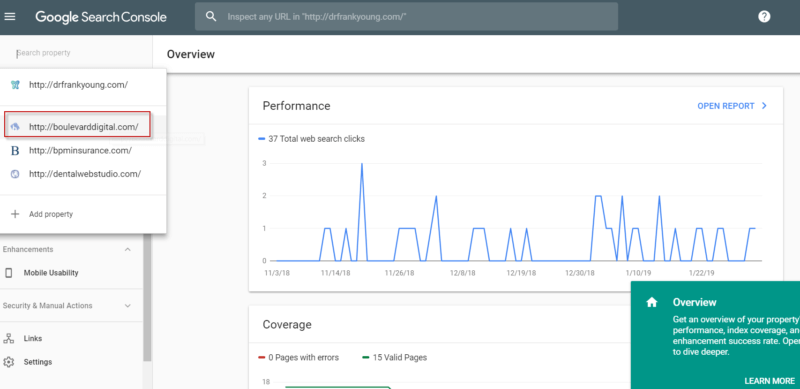

Check to see if your site exists in the drop down:

If you do not see your site in the top-left dropdown, it means that either the site has not been configured for Google Search Console OR your specific Google user account does not have access to an existing configuration.

Depending on your situation, you’ll either need to set up the site for Google Search Console using the instructions here, or request permission to access the existing Google Search Console configuration.

Additional resources:

Google Index Coverage

Overview:

Checks to see if your pages are eligible to show in Google search results.

Importance:

- If your pages are not showing in Google and other search engines, you are likely missing out on traffic to your site.

- If your pages are not showing in search engines, it may be a symptom of an underlying problem affecting other channels like Paid Search.

Checks to make:

See instructions below for how to conduct each check.

- Using Google Search Console, do you see any URLs in the “Not indexed” category?

- Are there URLs NOT in the index coverage report?

Instructions for the checks above:

Check 1:

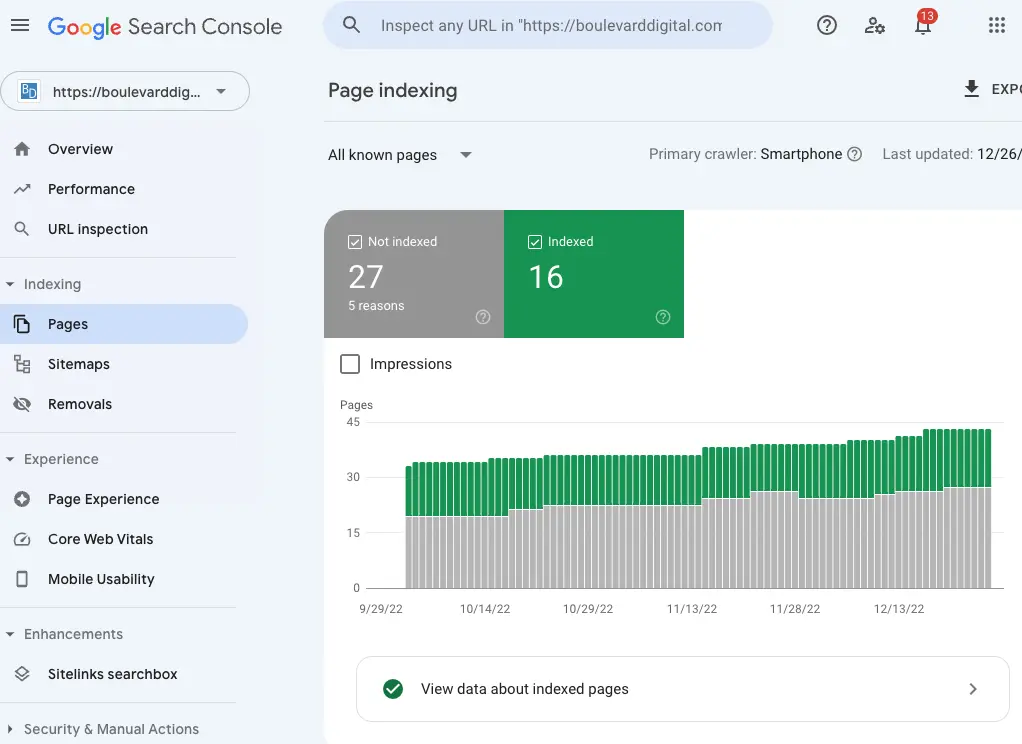

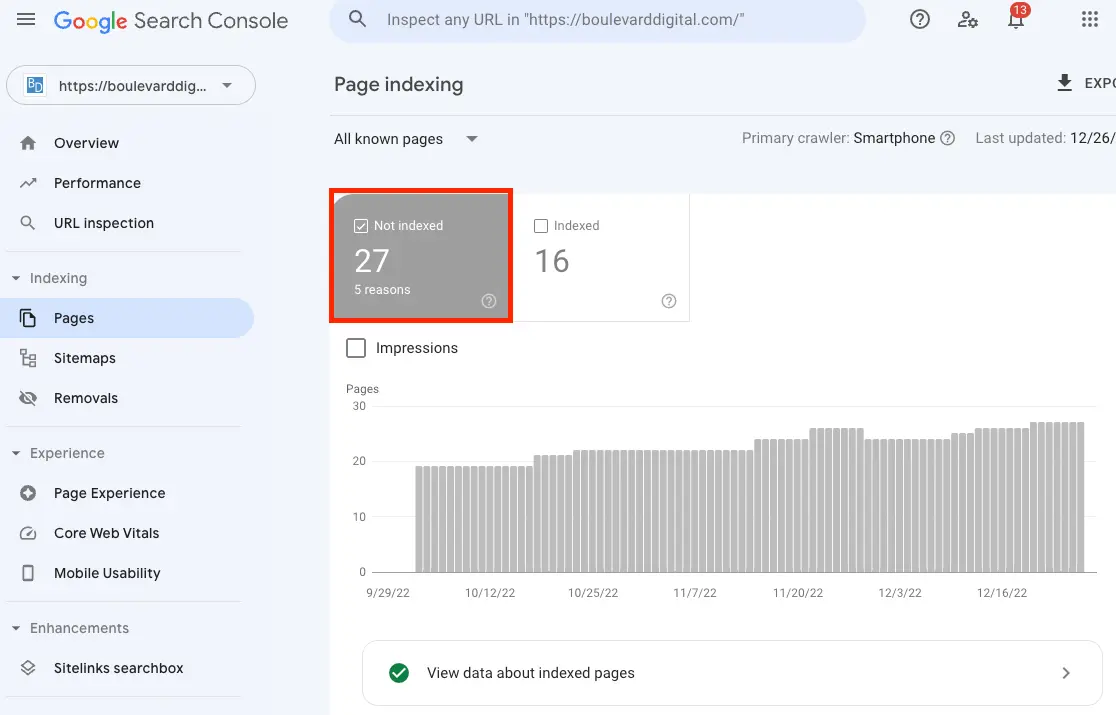

- Log into Google Search Console

- Navigate to Indexing > Pages in the left-hand navigation

- Investigate any potential issues in the “Not Indexed” category using the Google Search Console help center article here. This article will define each issue and tell you how to fix it.

Check 2:

- If the total URL count (sum of all categories) in the coverage report is less than your site’s total page count, then Google isn’t finding some pages on your site. This could be an issue if there are pages Google isn’t aware of that you want to rank. Pages not showing up in the coverage report could be due to multiple reasons such as pages not linked to from any other page on your site or pages not in the XML sitemap. Other checks in this audit will help you determine the root cause of this. For now, simply note which pages are not showing in the coverage report by following these steps:

- Download the coverage report

- Download a list of all pages from your website. If you have a CMS like WordPress, this should be easy using a plugin like this one. However, in certain circumstances, you may need to pull in a developer to download this list.

- Use a function like COUNTIF() to see whether the URLs in the site page download exist in the coverage report download. Here’s a quick guide on using COUNTIF() for this task.
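The same comparison can also be sketched in Python instead of a spreadsheet. This is a minimal illustration, assuming both downloads have already been reduced to plain lists of URLs; the function name `urls_missing_from_report` and the sample URLs are hypothetical.

```python
def urls_missing_from_report(site_urls, report_urls):
    """Return site URLs that do not appear in the coverage report, in original order."""
    # A set gives fast membership checks, much like COUNTIF() > 0 in a spreadsheet.
    reported = {url.strip() for url in report_urls}
    return [url for url in site_urls if url.strip() not in reported]


# Hypothetical sample data standing in for the two downloads.
site_urls = [
    "https://www.yoursite.com/",
    "https://www.yoursite.com/about",
    "https://www.yoursite.com/blog/post-1",
]
report_urls = [
    "https://www.yoursite.com/",
    "https://www.yoursite.com/about",
]

for url in urls_missing_from_report(site_urls, report_urls):
    print("Not in coverage report:", url)
```

The match is exact, so URLs that differ only by a trailing slash or protocol are treated as different pages, which is usually what you want for this check.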

Additional Resources:

- None at this time.

Robots.txt file

Overview:

Robots.txt is a text file instructing search engines how to crawl your website. The instructions you provide to search engines through this file can influence what pages show in search results and how they display in search results. With that said, it’s important to get them right!

Importance:

- If implemented incorrectly, it can result in improper crawling of your site. Improper crawling can then result in:

- A page you want shown not appearing in search results.

- A page you do not want shown appearing in search results.

- A page not passing ranking power when it should.

- If implemented correctly, it allows you to choose which URLs you want blocked from search crawling.

Checks to make:

See instructions below for how to conduct each check.

- Does the robots.txt file exist at yoursite.com/robots.txt?

- Is the entire robots.txt file name lower case, including the “.txt” extension?

- If the site has multiple subdomains, like blog.yoursite.com and us.yoursite.com, does each have its own robots.txt file?

- Does it reference the XML sitemap?

- Do all comments start with a pound symbol (“#”)?

- Does GSC show any resources being blocked that should not be?

Instructions for the checks above:

Checks 1-5: Navigate to yoursite.com/robots.txt

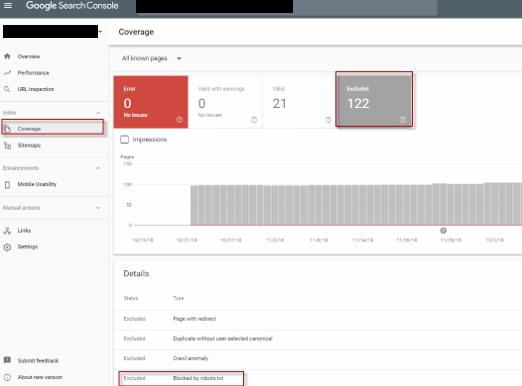

Check 6: To find resources blocked by robots.txt in GSC, navigate to Indexing > Pages and look under “Not indexed” for the “Blocked by robots.txt” reason.
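If you prefer to test a robots.txt file programmatically, the checks above can be approximated with Python's standard `urllib.robotparser` module. This is only a sketch: the sample file contents, the URLs, and the `audit_robots_txt` helper are hypothetical, and the standard-library parser does not implement every matching rule Google uses (for example, wildcards), so treat its answers as a first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents; in practice, paste in the text
# served at yoursite.com/robots.txt.
SAMPLE_ROBOTS_TXT = """\
# Block the admin area for all crawlers
User-agent: *
Disallow: /admin/

Sitemap: https://www.yoursite.com/sitemap.xml
"""


def audit_robots_txt(robots_txt, urls_that_must_be_crawlable):
    """Report referenced sitemaps and any important URLs the rules block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [
        url for url in urls_that_must_be_crawlable
        if not parser.can_fetch("*", url)
    ]
    # site_maps() returns None when no Sitemap line is present.
    return {"sitemaps": parser.site_maps() or [], "blocked": blocked}


result = audit_robots_txt(
    SAMPLE_ROBOTS_TXT,
    ["https://www.yoursite.com/products", "https://www.yoursite.com/admin/login"],
)
print(result)
```

An empty `sitemaps` list flags a failure of check 4, and anything in `blocked` is worth comparing against what GSC reports.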

Additional Resources

Meta Robots tag

Overview:

A meta robots tag tells search engines 1) Whether to index a page and 2) Whether to follow the links on a page. More information about the meta robots tag can be found in the Moz article here.

Code sample:

Importance:

- If implemented the wrong way, it can hide URLs from users in search results and prevent crawlers from finding the rest of your site and passing ranking power. This is bad.

- If implemented correctly, it allows you to choose which URLs you want to show in search results as well as whether you want search engines to pass ranking power to the pages linked to from the page with the meta robots tag.

Checks to make:

See instructions below for how to conduct each check.

- Does each URL that should be included in search results have one of the following: 1) An absence of “noindex” (Google defaults to indexing in this case) or 2) “index” is listed.

- Does each URL that should not be included in search results have a “noindex” value?

- Are there any URLs with a “nofollow” value? Note that these internal URLs may have a “nofollow” for a reason but should be investigated.

Instructions for the checks above:

All checks:

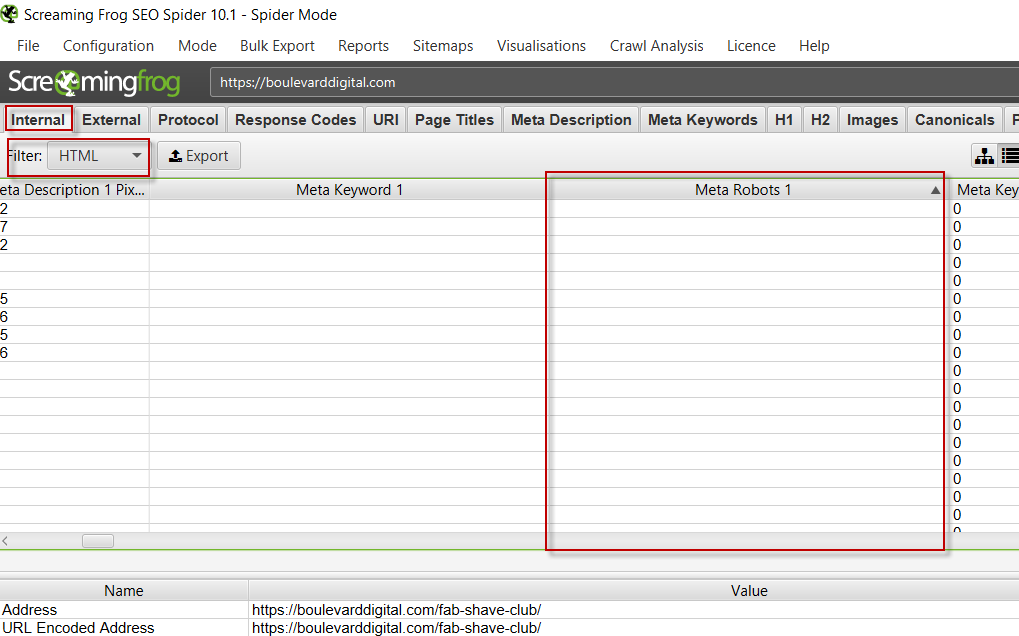

- Open Screaming Frog and crawl your site.

- Select the internal tab > filter for HTML > scroll right to find the “Meta Robots” column.

- For each URL, look in the Meta Robots column for the following:

- For URLs you DO want indexed, ensure the URL has one of the following: 1) An absence of “noindex” (Google defaults to indexing in this case) or 2) “index” is listed.

- For URLs you DO NOT want indexed, ensure “noindex” is listed.

- For URLs with a “nofollow” listed, question if there is a good reason for not following the links on that page.
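For spot-checking a single page outside of Screaming Frog, the logic of these checks can be sketched in Python with the standard `html.parser` module. The sample HTML, the class name `MetaRobotsParser`, and the helper functions are hypothetical illustrations, not part of any tool mentioned in this audit.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def meta_robots_directives(html):
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


def is_indexable(directives):
    # No "noindex" means indexable by default; "none" is shorthand for
    # "noindex, nofollow".
    return "noindex" not in directives and "none" not in directives


# Hypothetical page source containing a meta robots tag.
SAMPLE_HTML = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'

directives = meta_robots_directives(SAMPLE_HTML)
print(sorted(directives), "indexable:", is_indexable(directives))
```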

Additional Resources:

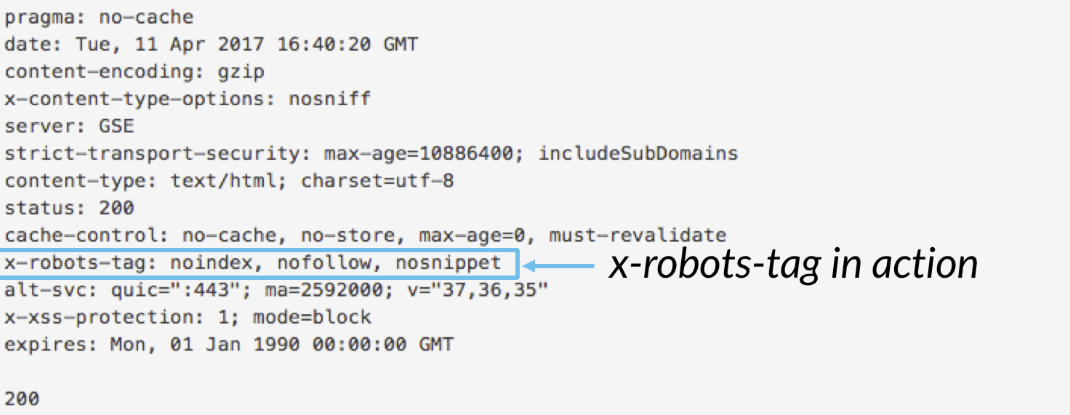

X-robots-tag

Overview:

The X-Robots-Tag is an HTTP response header that offers another way to tell search engines 1) Whether to index a page and 2) Whether to follow the links on a page. More information about the X-Robots-Tag can be found in the Moz article here.

Code Sample:

Importance:

- If implemented the wrong way, it can hide URLs from users in search results and prevent search engines from finding the rest of your site and passing ranking power. This is bad.

- If implemented correctly, it allows you to choose which URLs you want users to find in search results as well as whether you want search engines to pass link equity to the pages linked to from the page with the x-robots-tag.

Checks to make:

See instructions below for how to conduct each check.

- Does each URL that should be included in search results have one of the following: 1) An absence of “noindex” (Google defaults to indexing in this case) or 2) “index” is listed.

- Does each URL that should not be included in search results have a “noindex” value?

- Are there any URLs with a “nofollow” value? These internal URLs may have a “nofollow” for a reason but should be investigated.

Instructions for the checks above:

All checks:

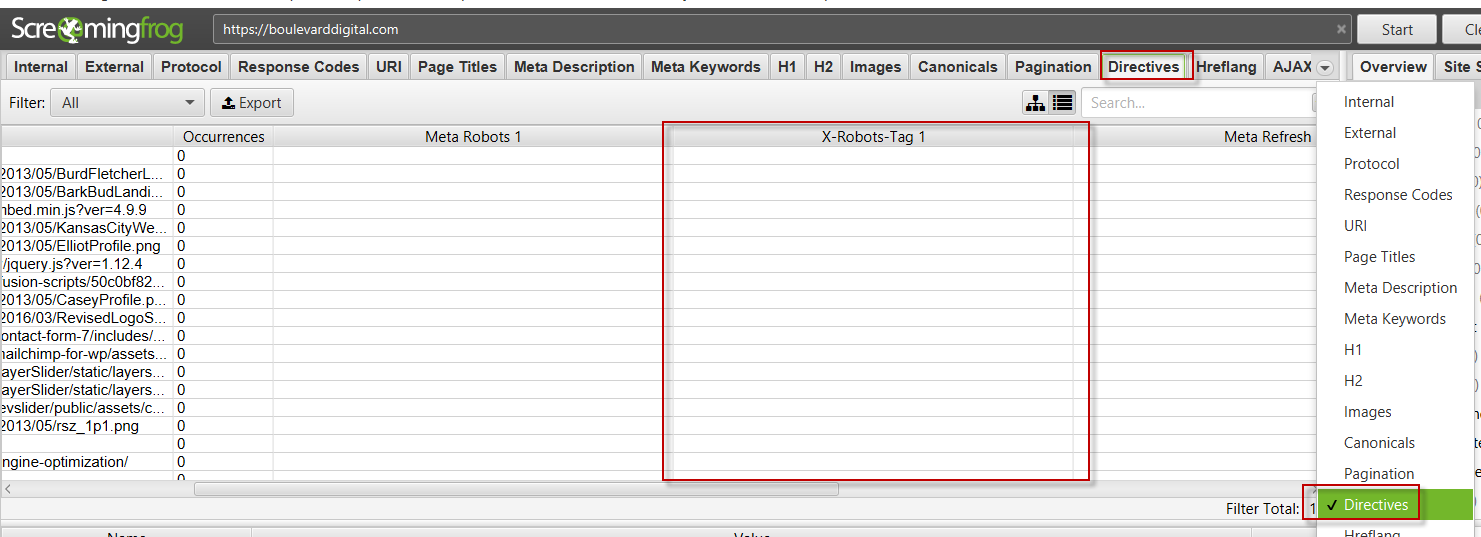

- Open Screaming Frog and crawl your site.

- Select the “Directives” tab.

- For each URL, look in the X-Robots-Tag column for the following:

- For URLs you DO want indexed, ensure there is either an absence of “noindex” (Google defaults to indexing in this case) or “index” is listed.

- For URLs you DO NOT want indexed, ensure “noindex” is listed.

- For URLs with “nofollow” listed, question if there is a good reason for not following the links on that page.
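Because the X-Robots-Tag lives in the HTTP response headers rather than the page source, the same checks can be sketched against a dictionary of headers. This is a simplified illustration: the `x_robots_directives` helper and the sample headers are hypothetical, and the sketch assumes the header value is a plain comma-separated list without a user-agent prefix such as `googlebot:`.

```python
def x_robots_directives(headers):
    """Collect directives from an X-Robots-Tag header, ignoring header-name case."""
    directives = set()
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            directives.update(
                part.strip().lower() for part in value.split(",") if part.strip()
            )
    return directives


# Hypothetical response headers for a PDF that should stay out of search results.
sample_headers = {
    "Content-Type": "application/pdf",
    "X-Robots-Tag": "noindex, nofollow",
}

directives = x_robots_directives(sample_headers)
print("noindex" in directives, "nofollow" in directives)
```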

Additional Resources

Error Responses

Overview:

First, a reminder that some errors like 404s are not always bad. For instance, if someone tries to visit a page that has never existed on your website, by default you want to return a 404 so that 1) the user can be pointed in the right direction and 2) Google knows not to index this page.

However, these errors are bad in the following situations:

- You are linking to pages that return errors (like a 404) within your website.

- You have a page that does exist but is returning an error when users and search engines try to access it.

Importance:

- Errors can result in a poor user experience.

- Search engines may not rank you as high if you have numerous errors.

Checks to make:

See instructions below for how to conduct each check.

- Are there any 4XX errors?

- Are there any 5XX errors?

- Are there any no response errors?

Instructions for the checks above:

All checks:

- Open Screaming Frog to crawl the domain you are checking for errors.

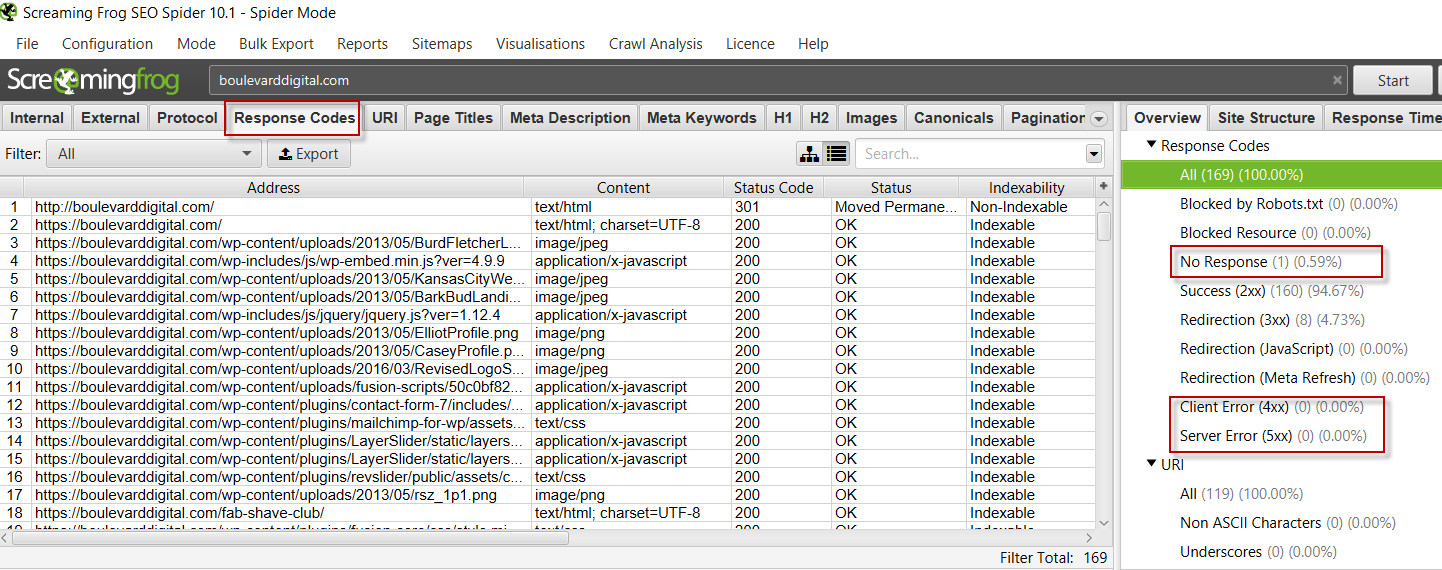

- Navigate to the Response codes tab (boxed in red) and then use the overview panel on the right to look for Client Errors (4XX), Server Errors (5XX) and No Response (boxed in red).

Additional Resources

- No additional resources at this time.

Redirects

Overview:

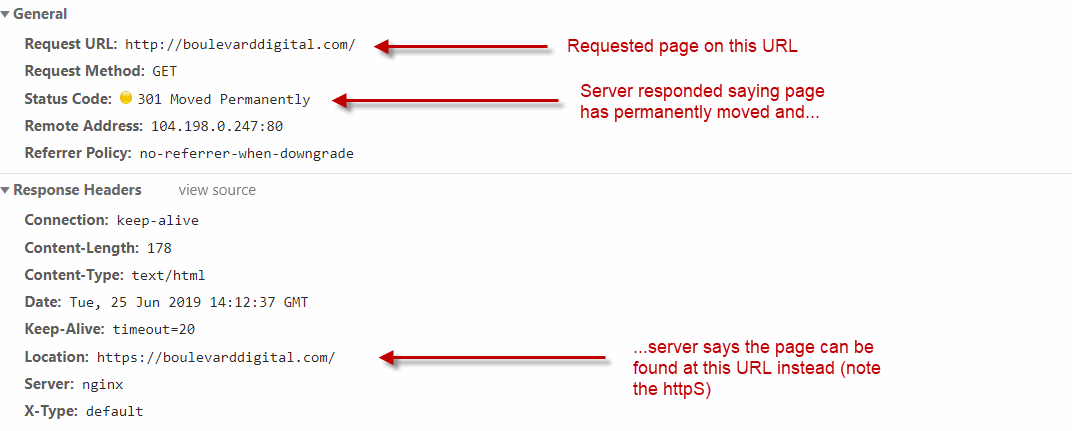

Redirects inform users and search engines a resource has moved from one URL to another URL. If you or a search engine requests content at a URL that has been moved, the server hosting the content (e.g. web page) responds saying the information is no longer available at the URL requested and to instead request the content at a new location. For the purpose of this audit, redirects have been divided into two categories:

- Page-to-page: These are redirects specific to one page. Here are some examples:

- https://www.yoursite.com/old-page 301 redirects to https://www.yoursite.com/new-page

- https://www.youroldsite.com/page 301 redirects to https://www.yournewsite.com/page

- Global redirect: These are redirects that apply to all pages, which is where “global” comes from. Here are some examples:

- Whenever someone enters a URL using HTTP (non-secure), redirect them to the URL using HTTPS (secure).

- Whenever someone enters a URL without WWW, redirect them to the URL with the WWW.

Code Sample:

Importance:

- Ensure users are redirected to the new page instead of receiving a 404 error message.

- Ensure search engines know which pages have been removed and replaced so they can reflect this in search results.

- Pass ranking power from the old URL to the new URL.

- Ensure users have a better experience by reducing page load time caused by unnecessary redirects.

Checks

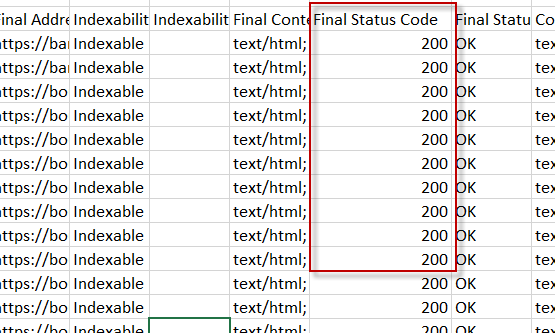

- Do all page-to-page redirects work properly? This includes: 1) The redirect is implemented as an HTTP redirect rather than a meta refresh tag or JavaScript. 2) The old URL redirects to the correct new URL. 3) There is only one redirect. 4) The HTTP redirect type is correct (e.g. 301 rather than 302 if the resource has moved permanently). 5) The final status code is 200 OK.

- Do all global redirects work properly? This includes: 1) HTTP to HTTPS 2) WWW to non-WWW or vice versa 3) Upper to lower case URL 4) Trailing slash to non-trailing slash 5) Multiple rules are enforced in a single redirect rather than a redirect chain.

Instructions for the checks above:

Check 1:

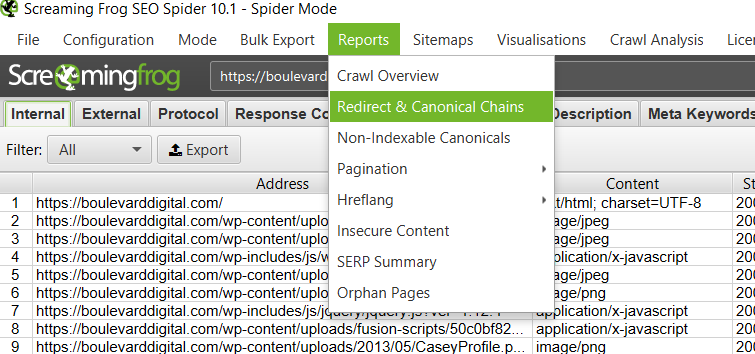

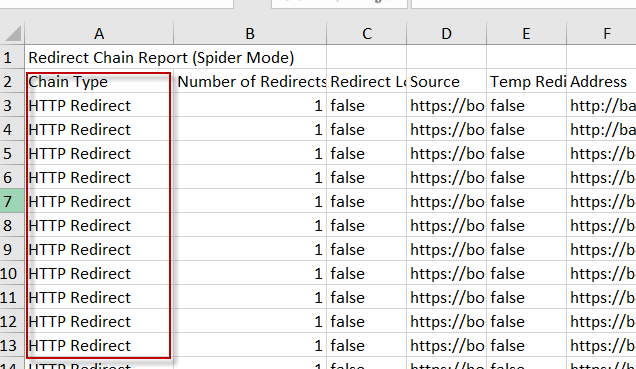

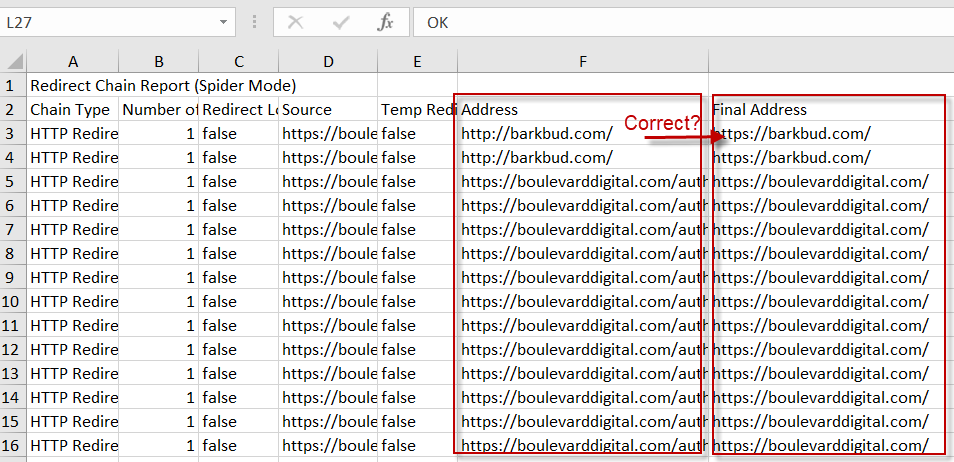

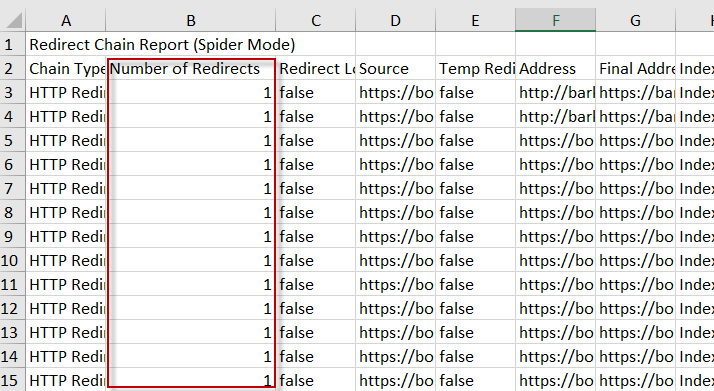

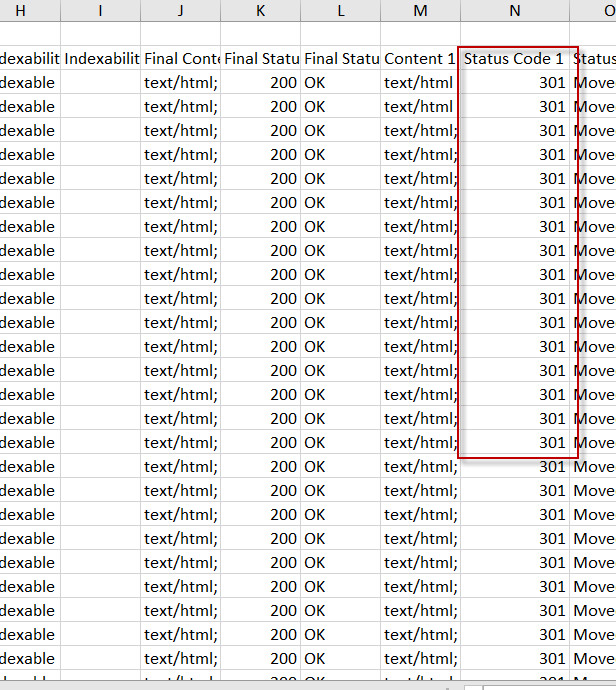

- Use Screaming Frog to crawl the site and then download the “Redirect and Canonical Chains” report.

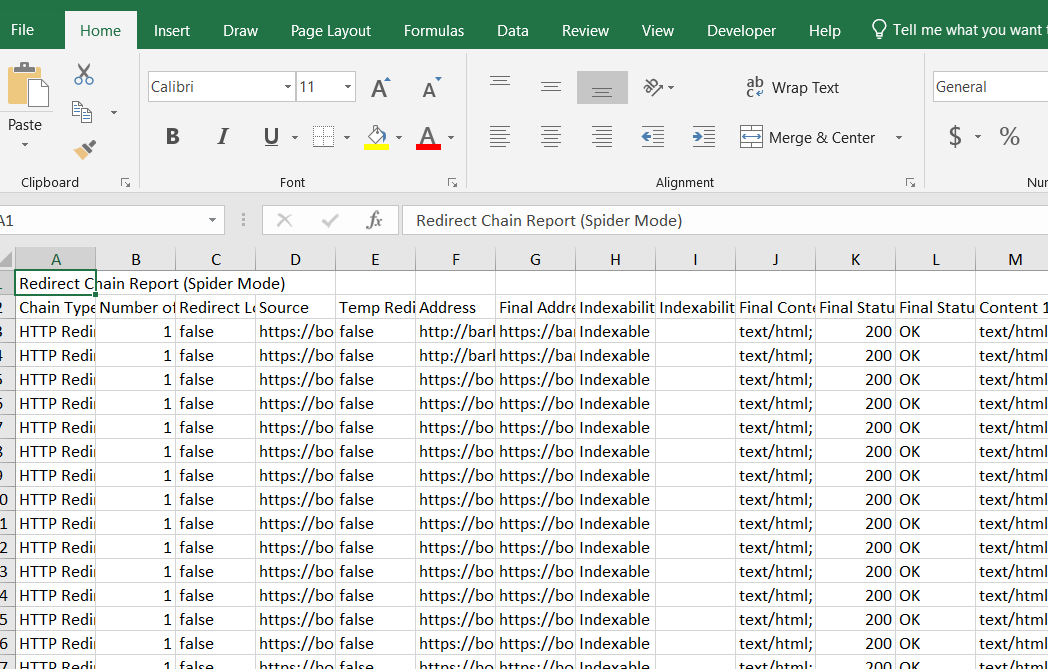

- This should give you a file you can open in Excel that looks like this:

- Ensure the “Chain Type” column is set to “HTTP Redirect” and not “JavaScript” or “Meta Refresh”

- Ensure the old URL (“address” column) redirects to the correct new URL (“Final Address” column)

- Ensure there is only one redirect

- The HTTP Status Code is correct (301 for permanent redirects and 302 for temporary redirects).

- The final status code is 200 OK
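Most of the checks in this list are mechanical, so they can be sketched in Python once you have each chain as a list of hops. The sketch below assumes you have already converted the report into `(url, status_code)` pairs; the function name `audit_redirect_chain` and the sample URLs are hypothetical. It does not cover the chain type check (HTTP versus JavaScript or meta refresh), which still needs to be read from the report.

```python
def audit_redirect_chain(hops, expected_final_url, permanent=True):
    """Check one redirect chain.

    hops is a list of (url, status_code) pairs in request order; the last
    pair is the final response and every earlier pair is a redirect.
    """
    issues = []
    redirects, (final_url, final_status) = hops[:-1], hops[-1]
    expected_status = 301 if permanent else 302

    if len(redirects) != 1:
        issues.append(f"expected exactly 1 redirect, found {len(redirects)}")
    for url, status in redirects:
        if status != expected_status:
            issues.append(f"{url} returned {status}, expected {expected_status}")
    if final_url != expected_final_url:
        issues.append(f"ends at {final_url}, expected {expected_final_url}")
    if final_status != 200:
        issues.append(f"final status is {final_status}, expected 200")
    return issues


# Hypothetical chain: one permanent redirect that lands on a working page.
chain = [
    ("https://www.yoursite.com/old-page", 301),
    ("https://www.yoursite.com/new-page", 200),
]
print(audit_redirect_chain(chain, "https://www.yoursite.com/new-page"))
```

An empty list means the chain passed every check in the sketch; each string in a non-empty list names one problem to document.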

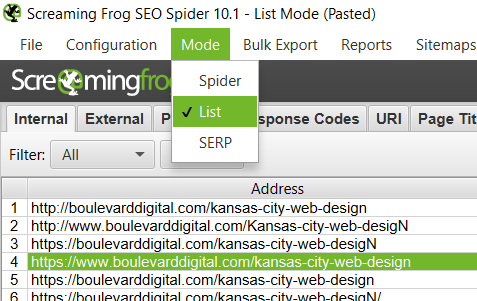

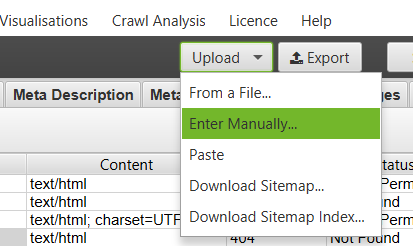

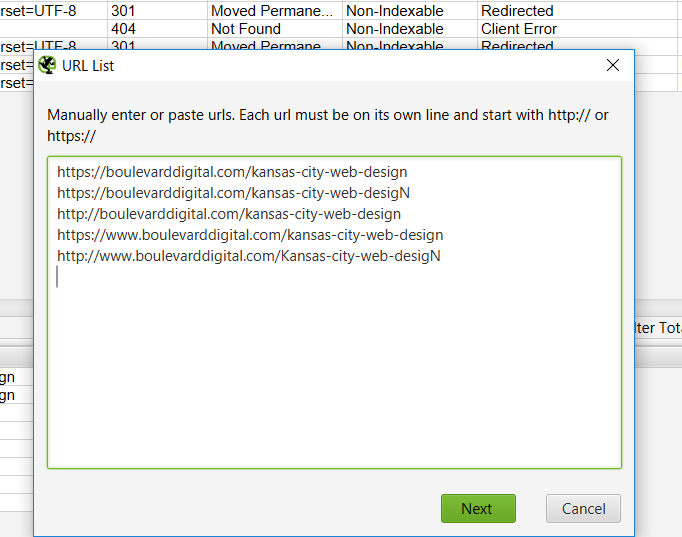

Check 2: Use Screaming Frog to test various URLs on your site to ensure the documented Global Rules above work:

- Upload a list of URLs you know should be redirected based on your chosen global redirect rules by opening Screaming Frog and changing your mode to “list”

- Upload > Enter manually

- Manually paste in five URLs that should trigger the redirects above:

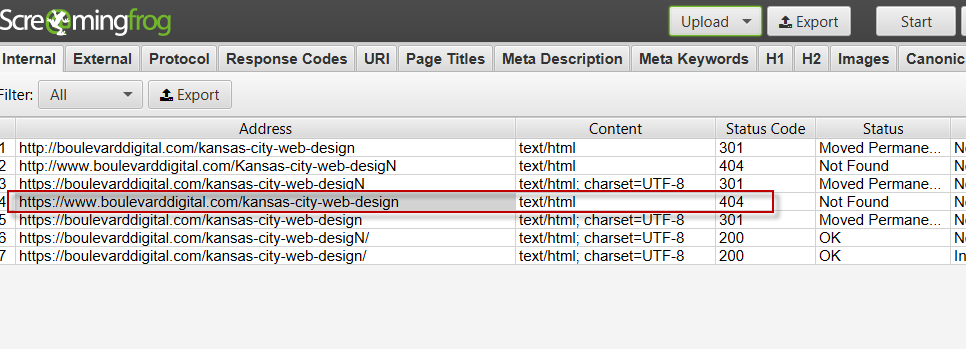

- Evaluate whether the Global redirects work on these URLs. For example, the URL https://www.boulevarddigital.com/kansas-city-web-design should have one HTTP 301 redirect to the non-www version of the URL. Instead, Screaming Frog shows a 404. This needs to be fixed.
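To know what each test URL should redirect to, it can help to compute the expected destination first. The sketch below assumes one particular set of global rules (HTTPS, lower case, no trailing slash, and non-www unless `use_www=True`); your site's rules may differ, and the function name `expected_global_url` is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def expected_global_url(url, use_www=False):
    """Apply the assumed global rules and return the single expected destination."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if use_www and not host.startswith("www."):
        host = "www." + host
    elif not use_www and host.startswith("www."):
        host = host[len("www."):]
    # Lower-case the path and drop a trailing slash, but keep "/" for the home page.
    path = parts.path.lower().rstrip("/") or "/"
    return urlunsplit(("https", host, path, parts.query, parts.fragment))


# A URL that breaks several rules at once should still need only one redirect.
print(expected_global_url("http://WWW.YourSite.com/Services/"))
```

Comparing this value with the “Final Address” that Screaming Frog reports shows whether all rules were enforced, and a single hop to it shows they were enforced in one redirect rather than a chain.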

Duplicate Content

Overview:

Duplicate content occurs when you have very similar content that can be accessed on multiple URLs. A good example would be an eCommerce site that uses query strings appended to URLs to change the color of the product a user is viewing. In this case, https://ecommerce.com/product-one?color=green would have very similar content to that on https://ecommerce.com/product-one?color=red. The only difference would be the product image displayed.

Importance:

Can impact search engine rankings in the following ways:

- Search engines don’t know which version of the page to show and take their best guess. Usually, search engines get it right. However, why take the chance?

- Ranking signals like links are split between the two URLs instead of flowing towards the chosen “master” copy of the content. In our example above, some sites may link to https://ecommerce.com/product-one?color=green while others link to https://ecommerce.com/product-one?color=red. If there are no directives like a canonical tag instructing search engines which URL is the “master” copy, ranking power won’t be consolidated to the master version of the URL and thus the URL may not rank as high as it otherwise could with the correct directives.

Checks to make:

See instructions below for how to conduct each check.

- Does Google Search Console identify any duplicate content issues?

- Does Screaming Frog show any duplicate content issues?

Instructions for checks above:

- Check 1: See if Google Search Console identifies duplicate content. Open Google Search Console and then use the Index Coverage Status report to look for instances of duplicate URLs. Instructions for this process can be found here.

- Check 2: Use the Screaming Frog duplicate content check described here.

- Next steps: Once you identify the duplicate content, you’ll need to determine what to do. Duplicate content can be resolved in a wide variety of ways. A great breakdown of how to handle duplicate content can be found here.

Canonical Tags

Overview:

A canonical tag tells Google which URL has the master copy of a page. It is used to help determine which URL shows in search results when multiple URLs have identical, or very similar content.

Code Sample:

Importance:

- Ensures the “master copy” of a page appears in search results instead of one of its duplicates.

- In cases where there are multiple pages with identical, or very similar content, these tags help:

- Ensure the “master copy” of the URL is crawled and indexed

- Determine which URL shows in search results

- Help funnel ranking power to the URL with the “master copy” of the content

Checks to make:

See instructions below for how to conduct each check.

- For duplicate content that must have its own URL, is there a canonical tag pointing to an indexable URL?

- Does every page that is not a duplicate have a self-referential canonical?

- Does every page have only one canonical tag?

- Are there any canonicals pointing to non-indexable URLs?

- Do all canonical link tags use absolute versus relative paths?

Instructions for the checks above:

- Check 1: Using the internal tab of Screaming Frog described here, check every duplicate URL for the existence of a canonical tag that points to a “master” copy of that URL.

- Check 2: Use the Screaming Frog canonical tab described here to identify pages missing self-referential canonicals. A self-referential canonical means a URL has a canonical tag pointing to itself.

- Check 3: Use the Screaming Frog canonical tab described here to identify any pages with multiple canonicals.

- Check 4: Use the Screaming Frog canonical tab described here to identify any pages with non-indexable canonicals.

- Check 5: Use the Screaming Frog canonical tab described here to identify any pages with relative canonicals.
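For a quick check of a single page, several of these canonical checks can be sketched in Python with the standard library. The class `CanonicalParser`, the helper `audit_canonical`, and the sample markup are hypothetical; the sketch does not test whether the canonical target is indexable, which requires fetching that URL.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonicals.append(attr.get("href") or "")


def audit_canonical(page_url, html):
    parser = CanonicalParser()
    parser.feed(html)
    found = parser.canonicals
    issues = []
    if not found:
        issues.append("missing canonical tag")
    if len(found) > 1:
        issues.append("multiple canonical tags")
    for href in found:
        parts = urlsplit(href)
        # An absolute URL has both a scheme and a host.
        if not (parts.scheme and parts.netloc):
            issues.append(f"relative canonical: {href}")
    return {
        "canonicals": found,
        "self_referential": found == [page_url],
        "issues": issues,
    }


# Hypothetical duplicate page that points to its "master" copy.
html = '<head><link rel="canonical" href="https://ecommerce.com/product-one"></head>'
print(audit_canonical("https://ecommerce.com/product-one?color=green", html))
```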

Additional resources:

Site Security

Overview:

Methods of securing the connection between websites and users, like HTTPS, help protect user data like passwords from being intercepted and abused.

Importance:

- These security measures provide a better user experience by keeping data like passwords and payment information safe.

- Some of these security measures, like HTTPS, are also ranking signals.

Checks to make:

See instructions below for how to conduct each check.

- Does Screaming Frog show any non-HTTPS URLs?

- Does Screaming Frog show any mixed content URLs?

- Does the site use Strict Transport Security (HSTS)?

- Is the SSL certificate expiring within a month?

Instructions for checks above:

Additional resources:

Mobile Best Practices

Overview:

These are checks to ensure your site is mobile friendly (e.g. legible text and clickable buttons) and checks to ensure Google crawls and indexes all valuable content on the mobile version of your website (whether responsive or on a separate domain).

Importance:

- If the mobile version of your site has content that differs from the desktop version of your site, this can impact your rankings.

- If your site has mobile usability errors like content being wider than the screen (causes left-to-right scrolling), text that is too small to read, or clickable elements that are too close together, Google may rank you lower for searches made on a mobile device (source).

Checks to make:

See instructions below for how to conduct each check.

- Are there URLs with content differences between desktop and mobile? If yes, fail and document.

- Does the site avoid incompatible plugins on mobile?

- Does the site have appropriately-sized text on a mobile device?

- Does the site avoid content wider than the screen on a mobile device?

- Does the site have appropriate sizing between clickable elements on mobile devices?

- Does the site specify a viewport using the meta viewport tag?

- Does the site have the viewport set to “device-width”?

- Is your site serving mobile and desktop content on different domains? If no, pass. If yes, are you following the best practices for this approach? If yes, pass. If no, document what needs to be fixed.

Instructions for checks above:

- Check 1: Conduct the Screaming Frog mobile parity audit described here.

- Checks 2-7: Use the Google Search Console mobile usability report described here. In this help center article you can find descriptions of the errors and how to fix them.

- Check 8: Is your mobile site on a different subdomain than your desktop site? For example, do you serve content to desktop users on https://www.example.com/example-page and content to mobile users on https://m.example.com/example-page? If so, are you following the best practices here? If not, document what needs to be fixed.

Additional resources:

XML Sitemap

Overview:

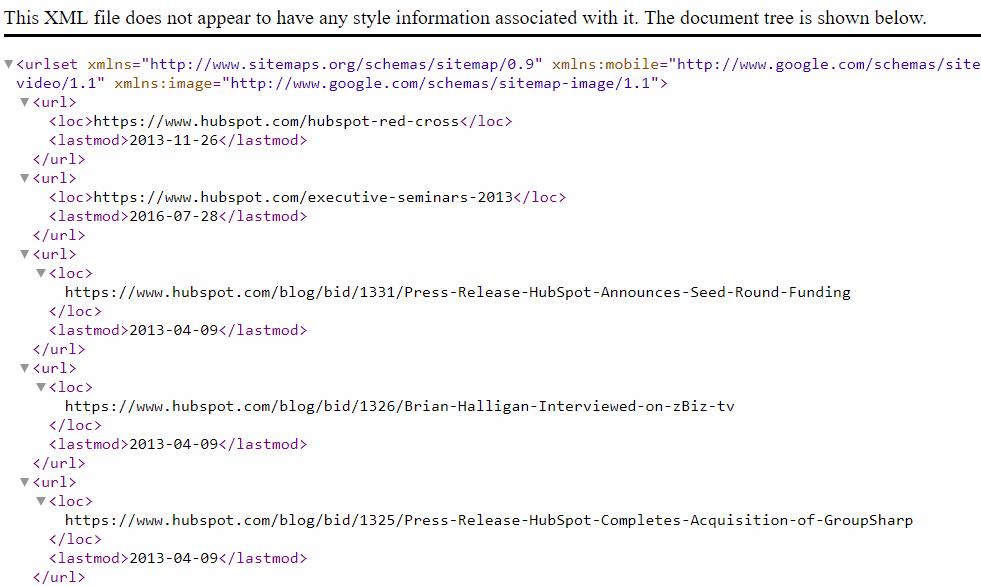

XML sitemaps can include information about the pages, videos, and images on your website. XML sitemaps help search engine crawlers find and understand all the content on your website. Here are some important notes about sitemaps:

- Websites can have a single sitemap for one piece of content (e.g. pages) or multiple sitemaps for multiple types of content (e.g. videos and images).

- Websites with multiple sitemaps will have a sitemap index file that points search engine crawlers to all the sitemaps on the site. It will look something like this: https://www.yourdomain.com/sitemap_index.xml. In this file will be links to all the sitemaps.

Code sample:

Importance:

- A correct XML sitemap helps ensure search engines like Google can crawl and index all pages on your site.

- A correct XML sitemap informs search engines like Google about various aspects of each page such as the last time it was updated.

- An incorrect XML sitemap may result in search engines like Google not consuming your sitemap or misunderstanding what they do consume. This could result in pages not being crawled, indexed, and ranked properly.

Checks to make:

See instructions below for how to conduct each check.

- Does each subdomain on the website have an XML sitemap?

- Are the XML sitemap(s) available at the correct location?

- Are the XML sitemap(s) referenced in the robots.txt file?

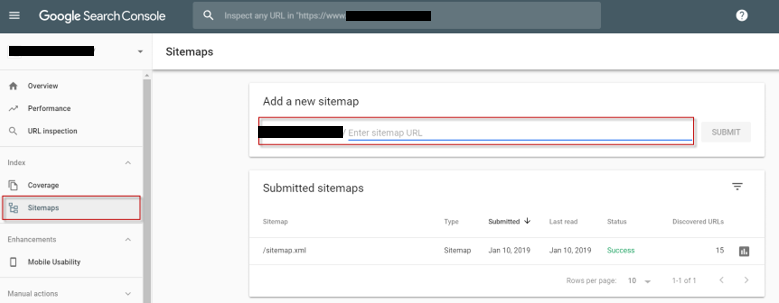

- Does Google know about the XML sitemap(s)?

- Does Google Search Console show any errors with your XML sitemap(s)?

- Do the XML sitemap(s) contain any non-indexable URLs?

- Does the website have any URLs not in the XML Sitemap(s)?

- Does each sitemap contain no more than 50,000 URLs and stay under 50MB uncompressed?

- Are all XML sitemap URLs fully qualified (e.g. https://www.example.com/page-1 vs /page-1)?

Instructions for the checks above:

Check 1:

- First, check the GSC sitemaps report using the instructions here to see if a sitemap is listed. If you cannot find it there, check the following places:

- Look at the robots.txt file for a reference to the sitemap(s). For example, enter the URL www.yourdomain.com/robots.txt

- If the robots.txt file has no mention of sitemap(s), enter the URL where the sitemap should be found. For example, www.yourdomain.com/sitemap_index.xml (multiple sitemaps) or www.yourdomain.com/sitemap.xml (single sitemap). Note that while these are standard naming conventions, different naming conventions can be used like www.yourdomain.com/sitemap-1.xml

- Finally, try the following search to look for only XML files on yourdomain.com:

- If none of these checks reveal a sitemap, there is a pretty good chance the sitemaps either do not exist or are not optimized.

Check 2: Check to see if the XML sitemap(s) are accessible at the root of the site (e.g. www.yourdomain.com/sitemap.xml).

Check 3: Check to see if the XML sitemap(s) are referenced in the robots.txt file found at www.yourdomain.com/robots.txt.

Checks 4-5: Navigate to the sitemap section of Google Search Console and ensure the most recent versions of the sitemap(s) have been submitted and have a status of “success.” If not, use the instructions here to diagnose the errors.

Checks 6-8: Perform a Screaming Frog XML Sitemap Audit using the instructions here.

Check 9: Review the XML sitemap looking for any relative URLs (e.g. /products versus https://subdomain.domain.com/products). A definition of relative versus absolute URLs can be found here.
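The size limit and relative URL checks are easy to script for a single sitemap file. The sketch below uses Python's standard `xml.etree.ElementTree`; the sample sitemap and the `audit_sitemap` helper are hypothetical, and the sketch assumes a regular `urlset` sitemap rather than a sitemap index file.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB, measured on the uncompressed file

# Hypothetical sitemap with one fully qualified URL and one relative URL.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/page-1</loc><lastmod>2022-12-29</lastmod></url>
  <url><loc>/page-2</loc></url>
</urlset>"""


def audit_sitemap(xml_text):
    root = ET.fromstring(xml_text)
    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS)]
    relative = [
        url for url in locs
        if not (urlsplit(url).scheme and urlsplit(url).netloc)
    ]
    return {
        "url_count": len(locs),
        "too_many_urls": len(locs) > MAX_URLS,
        "too_large": len(xml_text.encode("utf-8")) > MAX_BYTES,
        "relative_urls": relative,
    }


print(audit_sitemap(SAMPLE_SITEMAP))
```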

Additional resources:

- Sitemaps.org – Agreed upon best practices for configuring XML sitemaps

- Sitemaps.org XML sitemap FAQs – Covers questions like where the sitemap should be placed.

- Google Webmaster Article – What are XML sitemaps and why they are important

- Google Webmaster Article – How to build an XML sitemap

Title Tag

Overview:

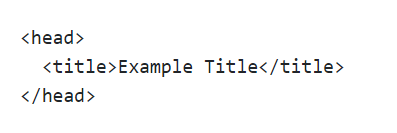

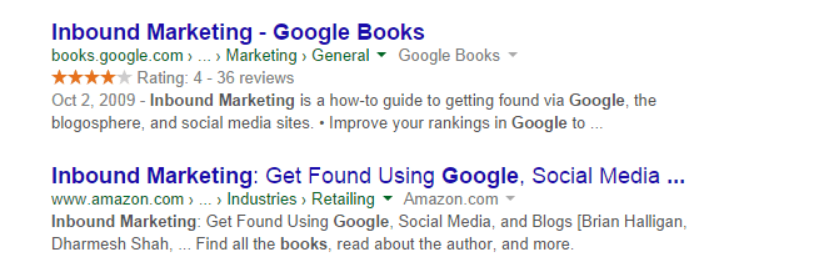

A title tag is a piece of HTML code describing the contents of a web page. It is used in three main places: 1) Search results 2) In browser tabs 3) On social sites if OG tags are not provided.

Code Sample:

Importance:

- Organic search visibility

- Directly impacts rankings by helping search engines better understand the content on your page.

- Indirectly impacts rankings by influencing the CTR of your search results.

- Organic search traffic

- Directly impacts traffic by influencing the CTR of your search results.

- User experience

- Helps ensure users have a consistent experience between clicking on your search results and landing on your page.

- Helps users understand where they are on your site by looking at the title in browser tabs.

Checks to make:

See instructions below for how to conduct each check.

- Are there any missing title tags?

- Are there any duplicate title tags?

- Are there any title tags over 60 characters?

- Are there any title tags under 30 characters?

- Are there any spelling errors?

- Are there any title tags with extra spaces?

- Are there any title tags that are not readable?

- Are there any title tags that do not make sense given the page’s content?

Instructions for checks above:

- Checks 1-4: Use the “Page Titles” tab of Screaming Frog described here.

- Check 5: Navigate to the “Content” tab described here. If the spelling and grammar error sections are blank, you’ll need to configure them and then recrawl the site using the instructions here.

- Check 6: While this should be caught in the grammar checks, it doesn’t hurt to download the crawl and ctrl+f for double spaces.

- Checks 7-8: To see if the page title is readable and makes sense, look to see if the title is meant for humans or stuffed with keywords for bots.

- For example, Green shoes sale – Cheap Green shoes | Shoes.com is hard to read and stuffed with keywords. This would be a candidate for optimization.

- For large sites, you’ll want to prioritize which titles to evaluate (for example, by organic sessions), as this is difficult to do at scale.
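If you export page titles from your crawl, the mechanical checks (missing, duplicate, length, and extra spaces) can be sketched in Python. The `audit_titles` helper and the sample titles are hypothetical; the 30 and 60 character thresholds come from the checks in this section. Spelling, readability, and relevance still need a human review.

```python
from collections import Counter


def audit_titles(titles_by_url, min_len=30, max_len=60):
    """Return {url: [issues]} for every URL whose title fails a check."""
    counts = Counter(
        title.strip() for title in titles_by_url.values() if title and title.strip()
    )
    report = {}
    for url, title in titles_by_url.items():
        title = title or ""
        issues = []
        if not title.strip():
            issues.append("missing")
        else:
            if len(title) > max_len:
                issues.append("too long")
            if len(title) < min_len:
                issues.append("too short")
            if counts[title.strip()] > 1:
                issues.append("duplicate")
            if title != title.strip() or " " * 2 in title:
                issues.append("extra spaces")
        if issues:
            report[url] = issues
    return report


# Hypothetical crawl export: URL path -> title tag text.
titles = {
    "/a": "Green Running Shoes for Trail and Road | Shoes.com",
    "/b": "Green Running Shoes for Trail and Road | Shoes.com",
    "/c": "Shoes",
    "/d": "",
    "/e": "Waterproof Hiking" + " " * 2 + "Boots for Men and Women | Shoes.com",
    "/f": "About Our Family-Owned Shoe Store | Shoes.com",
}

for url, issues in audit_titles(titles).items():
    print(url, issues)
```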

Additional resources:

Meta Description

Overview:

A meta description tag is a piece of HTML code describing the contents of a web page. It allows you to provide a little more detail in addition to what you’ve specified in the title tag.

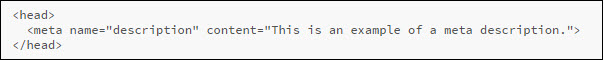

Code Sample:

Importance:

- Organic search visibility

- While the meta description tag is not a direct ranking factor, it can indirectly impact rankings by influencing the CTR of your search results.

- Organic search traffic

- Directly impacts traffic by influencing the CTR of your search results.

- User experience

- Helps ensure users have a consistent experience between clicking on your search results and landing on your page.

Checks to make:

See instructions below for how to conduct each check.

- Are there any missing description tags? If yes, fail and document the URLs.

- Are there any duplicate description tags? If yes, fail and document the URLs.

- Are there any description tags over 155 characters? If yes, fail and document the URLs.

- Are there any description tags under 70 characters? If yes, fail and document the URLs.

- Are there any spelling errors? If yes, fail and document the URLs.

- Are there any description tags with extra spaces? If yes, fail and document the URLs.

- Are there any description tags with double quotes? If yes, fail and document the URLs.

- Are there any description tags that are not readable? If yes, fail and document the URLs.

- Are there any description tags that do not make sense given the page’s content? If yes, fail and document the URLs.

Instructions for checks above:

All checks:

- Checks 1-4: Use the “Meta Description” tab of Screaming Frog described here.

- Check 5: Navigate to the “Content” tab described here. If the spelling and grammar error sections are blank, you’ll need to configure them and then recrawl the site using the instructions here.

- Check 6: While this should be caught in the grammar checks, it doesn’t hurt to download the crawl and ctrl+f for double spaces.

- Checks 8-9: To see if the description tag is readable and makes sense, look to see if the description is meant for humans or stuffed with keywords for bots.

- Ask yourself questions like “is this something meant for humans to read?” and “does the description make sense given the page’s content?”

- For large sites, you’ll want to prioritize by organic sessions or some other metric as this is difficult to do at scale.

Additional Resources:

Page Not Found Best Practices

Overview:

These are best practices for how your website should respond when a user or search engine like Google tries to visit a page that does not exist.

Importance:

- UX: Broken pages are a bad experience that can be softened with helpful language and resources that move the user along their journey as efficiently as possible. Believe it or not, if done right, they can be turned into a positive brand-promoting experience. See examples of brand-promoting 404 pages here.

- SEO: Help search engines like Google understand what content should not be indexed.

Checks to make:

See instructions below for how to conduct each check.

- When accessing a URL that does not exist on your website, do you receive a 404 HTTP response? If yes, pass. If no, fail.

- Does the 404 page clearly indicate the page cannot be found?

- Does the 404 page use friendly, branded, and helpful language?

- Does the 404 page have the navigation bar and a link to the home page?

- Does the 404 page include search functionality?

- Does the 404 page list popular or related pages the user can browse?

Instructions for checks above:

- Check 1: Enter a URL you know does not exist into Screaming Frog. Then use the status codes tab described here to see if the URL you entered returns a status code other than a 404.

- Checks 2-6: Enter a URL you know does not exist on your domain and audit it against the checks above.

Additional resources:

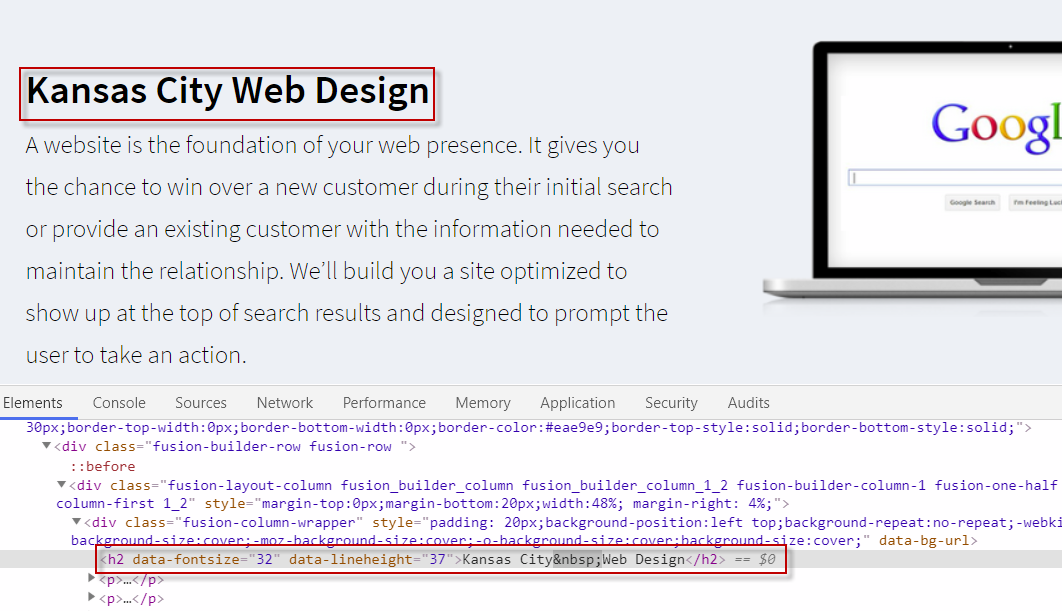

Text in images

Overview:

Text contained within images cannot be read by Google. Alt tags are not a replacement as they may not be given the same weight by Google and are meant for a different purpose.

Importance:

Having text in images can present the following issues:

- Search engines cannot read and understand the content on your page. This can result in lower rankings.

Checks to make:

See instructions below for how to conduct each check.

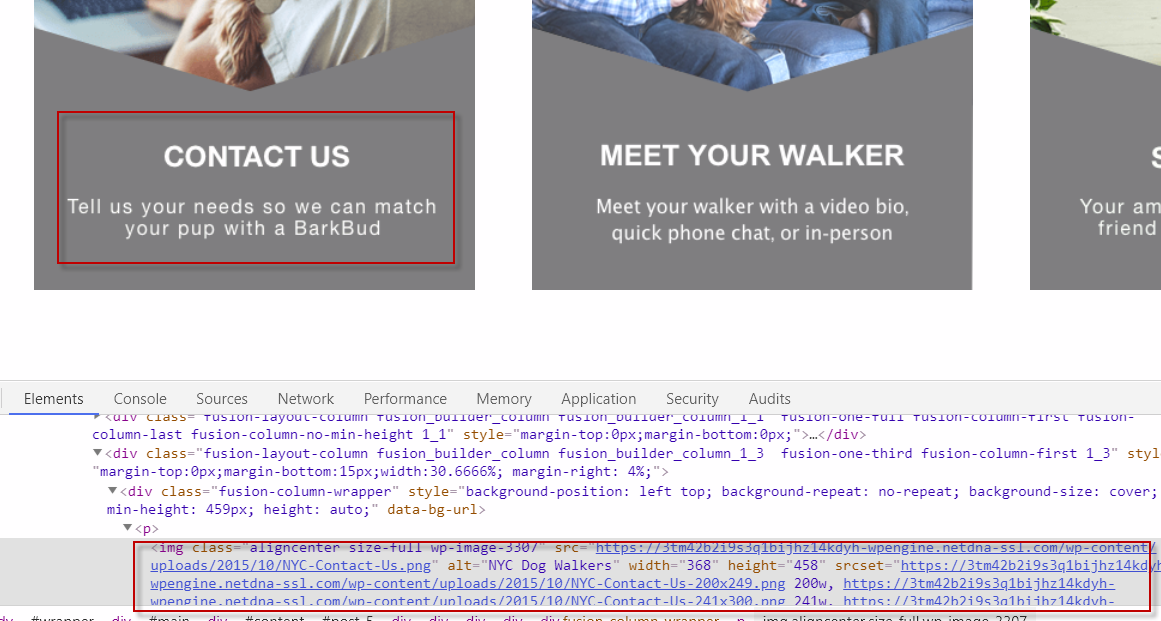

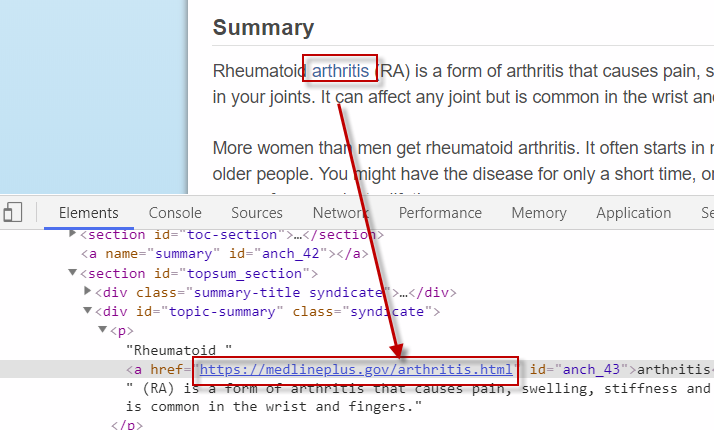

- Using Chrome inspector, do you see any important text implemented as an image?

Instructions for checks above:

Check 1:

- Look through the highest trafficked pages on your site and highlight different parts of the copy using chrome inspector. If you see the text is represented in an image, note this above.

- Here is an example of a text implemented as an image:

- Here is an example of text implemented as body copy:

Additional resources:

- Nothing at this time.

Auditing JavaScript

Overview:

Google needs to understand the content on your website in order to rank it. JavaScript, when implemented poorly, can result in your content being hidden from Google or other search engines. To better understand the relationship between Google’s Crawlers, Indexer, and JavaScript, I recommend reading the article here followed by the article here.

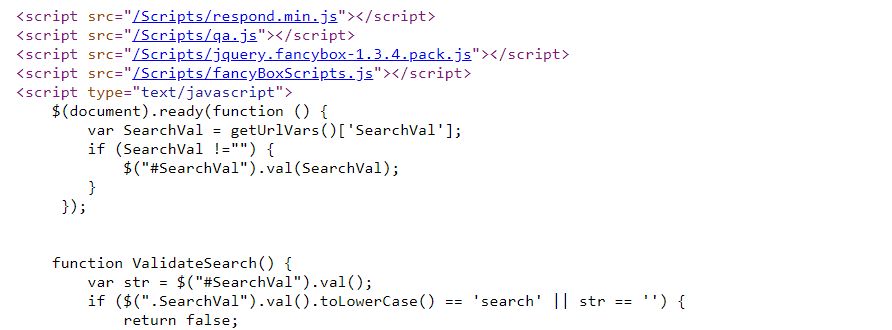

Code Sample:

Importance:

Think of HTML as the structure of a house (wood frame), CSS as the aesthetics (paint on the wall) and JavaScript as the functionality (door that opens in or out). JavaScript helps the website with a lot of its functionality. However, it can also cause problems when implemented incorrectly. Some of these problems include:

- Contradictions between tags in the raw HTML and what is changed after it has been manipulated by JavaScript. This could include a noindex tag in the raw HTML that after JavaScript code runs, changes to an index tag.

- Content that does not appear until JavaScript is rendered may be hidden from browsers and crawlers that either lack support for JavaScript rendering or support it less fully than Google does.

- Content loading after Google takes a snapshot of your site to use for indexing. This could be due to content that depends on user interactions.

Checks to make:

See instructions below for how to conduct each check

- Are there differences in metadata between the raw HTML and the JavaScript-rendered HTML?

- Is there content in the JavaScript-rendered HTML that is absent from the Raw HTML?

- Are your pages missing key parts of the content when you turn off JavaScript?

- Is there any content where an action must be taken in order to see the content?

Instructions for the checks above:

Check 1: Run the Screaming Frog JavaScript parity audit described here and then use the JavaScript tab described here to look for differences in metadata between the raw HTML and the JavaScript-rendered HTML. Note any differences between the different elements including title, description, robots, and canonical tags.

Check 2: Run the Screaming Frog JavaScript parity audit described here and then use the JavaScript tab described here to look for differences in word count between the raw HTML and JavaScript-rendered HTML.

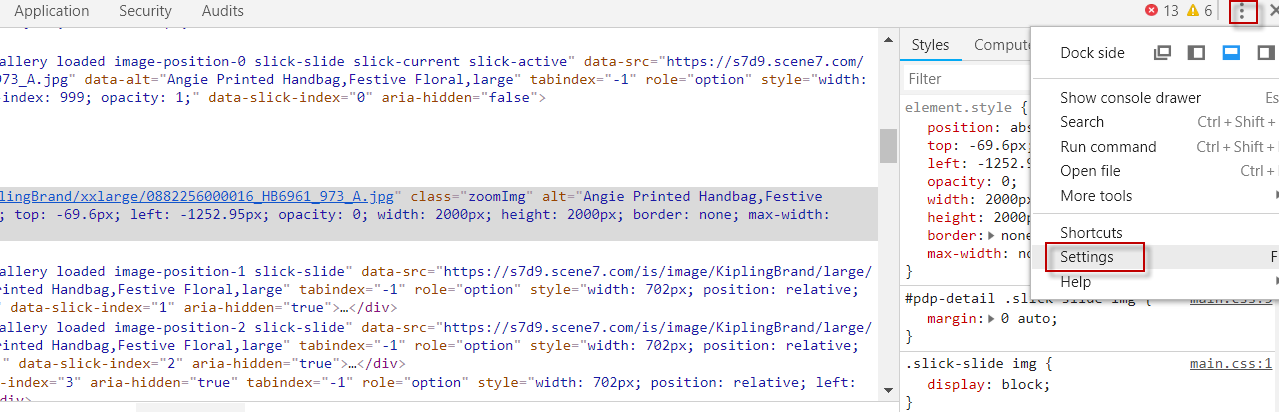

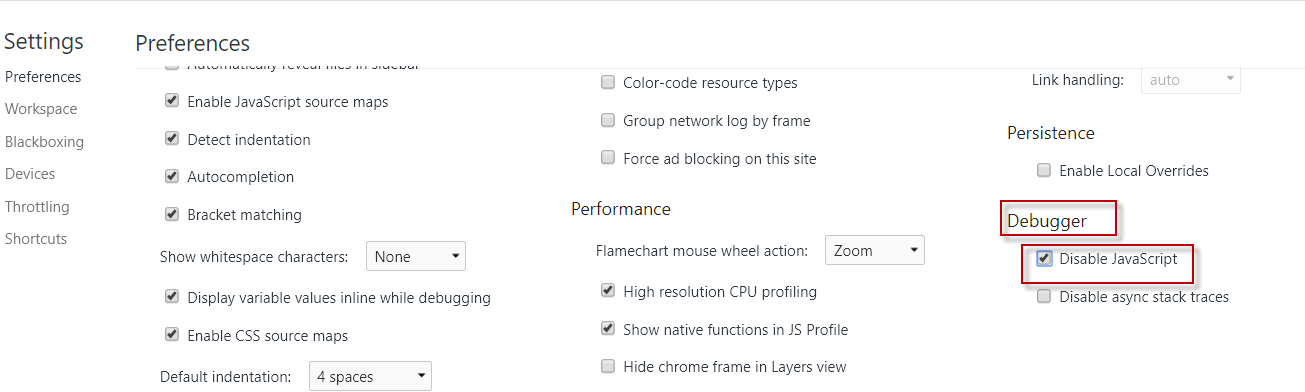
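If you have saved both the raw HTML and the JavaScript-rendered HTML for a page, the metadata comparison in Check 1 can also be sketched in Python. This is an illustration only, not how Screaming Frog does it; the class and function names are hypothetical, and it looks only at the title, meta description, meta robots, and canonical tags.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collect title, meta description, meta robots, and canonical URL."""

    def __init__(self) -> None:
        super().__init__()
        self.metadata = {"title": "", "description": "", "robots": "", "canonical": ""}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = attributes.get("name", "").lower()
            if name in ("description", "robots"):
                self.metadata[name] = attributes.get("content", "").strip()
        elif tag == "link" and attributes.get("rel", "").lower() == "canonical":
            self.metadata["canonical"] = attributes.get("href", "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.metadata["title"] += data.strip()


def extract_metadata(html: str) -> dict:
    parser = MetadataParser()
    parser.feed(html)
    return parser.metadata


def metadata_differences(raw_html: str, rendered_html: str) -> dict:
    """Return {field: (raw_value, rendered_value)} for every field that differs."""
    raw = extract_metadata(raw_html)
    rendered = extract_metadata(rendered_html)
    return {key: (raw[key], rendered[key]) for key in raw if raw[key] != rendered[key]}
```

An empty result means the four tags match. A result such as `{"robots": ("noindex", "index, follow")}` is the kind of contradiction described in the Importance section above.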

Check 3:

- Is your page missing key parts of the content when you turn off JavaScript? While Google may be able to see this content, other search engines may not. To test this, use Chrome Developer Tools to view a page without JavaScript

- Go to inspect element (CTRL + Shift + I on PC) > Ellipses > Settings

- Scroll down until you see the Debugger section and then click on Disable JavaScript

- Refresh the browser

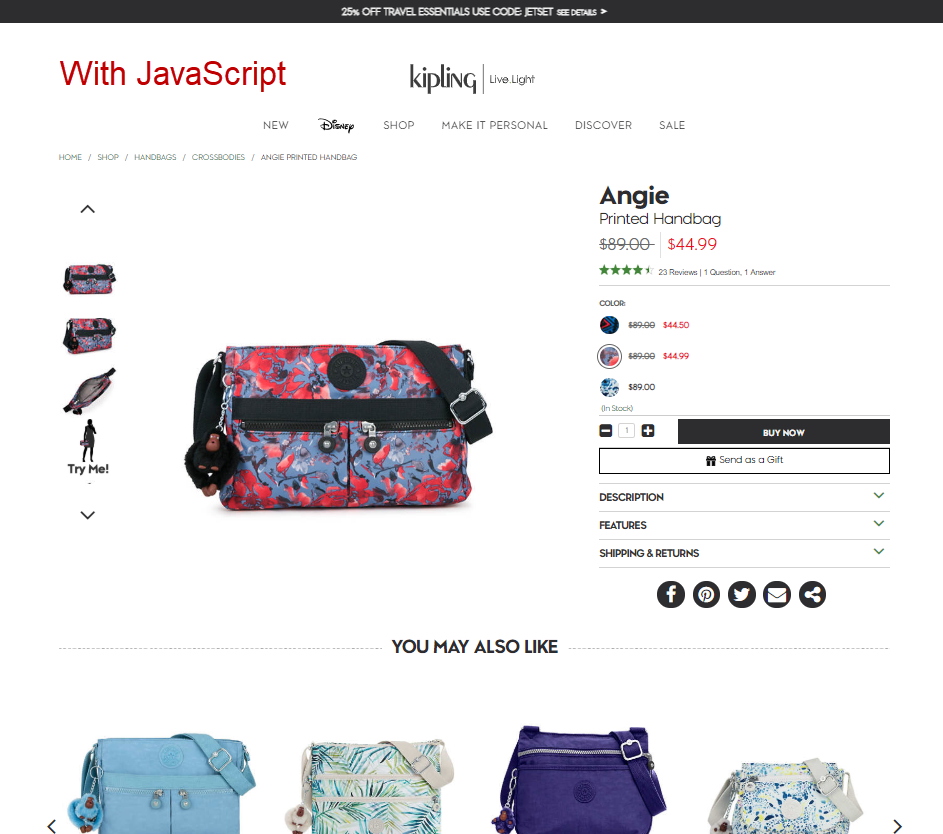

- This will now show you any content missing when JavaScript is not available. See below for two examples, one with JavaScript enabled and one without it enabled.

- You can see how the second example is missing content. Take note of any missing content.

Check 4:

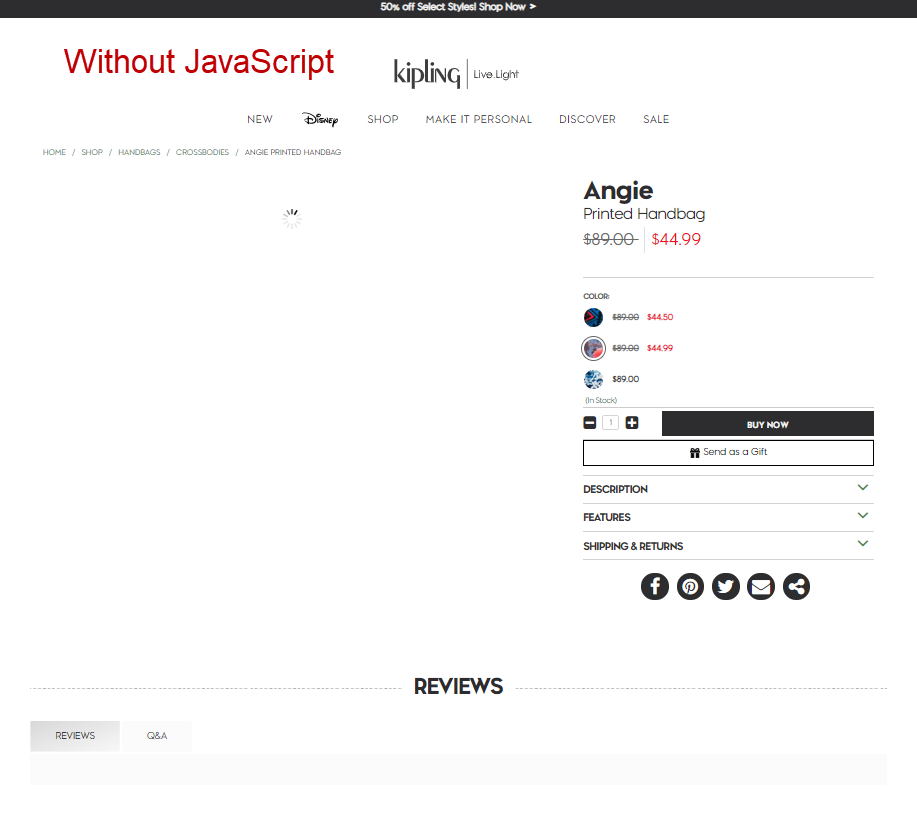

- Is there any content where an action must be taken in order to see the content? There may be an automated way to do this but I’m not aware of it at the moment. I’d look for common elements that are used to “hide/display” content, like tabs, and ensure these tabs are “hiding/displaying” content using CSS rather than JavaScript. If they display content using JavaScript, this content is likely to not be indexed by Google and other search engines.

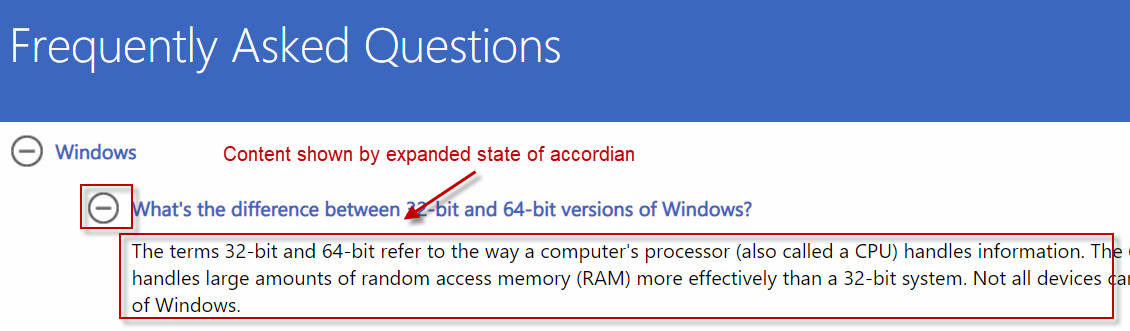

- Here’s an example: I find that some of the content on the following page is hidden under a collapsed accordion tab:

- This content could be hidden by CSS or JavaScript. If it is hidden by CSS, it is in the raw HTML and not an issue. If it is hidden using JavaScript, it can become an issue as Google and other search engines may not see it as they’re not going to go through and click every expansion tab. To test this, we’ll want to look at the raw HTML to see if we can find the text from the expanded box. If we can find it, this means the text is NOT dependent on user-prompted JavaScript rendering.

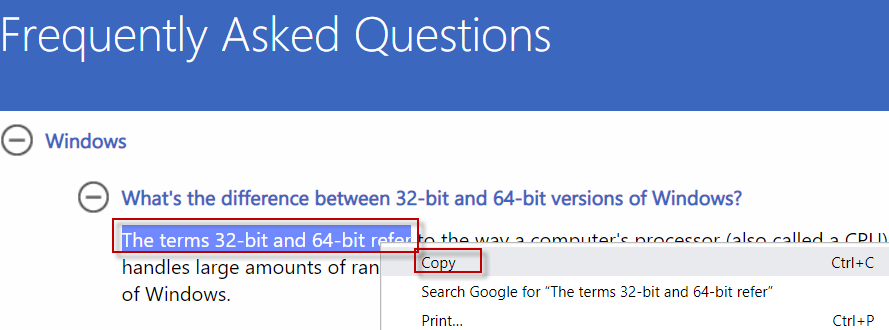

- Start by copying a small portion of the “hidden” content and then searching for this in the raw HTML.

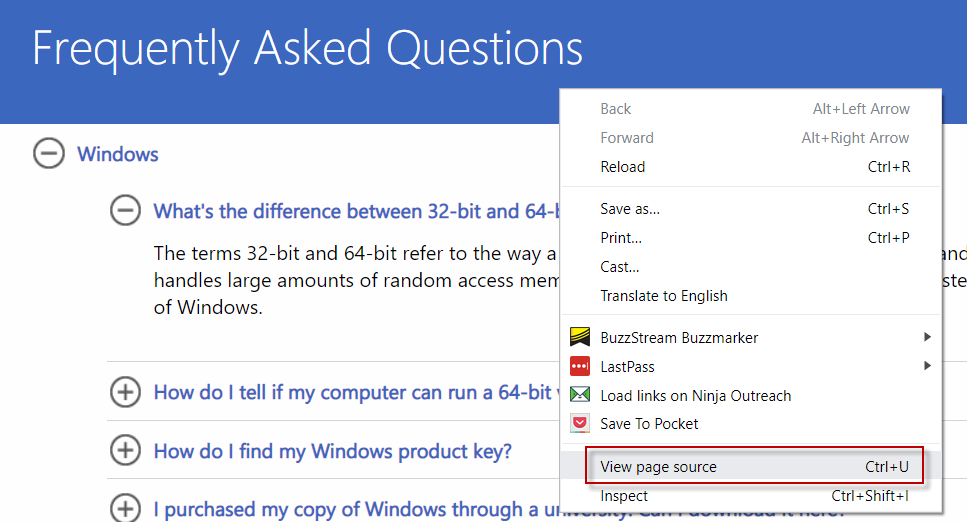

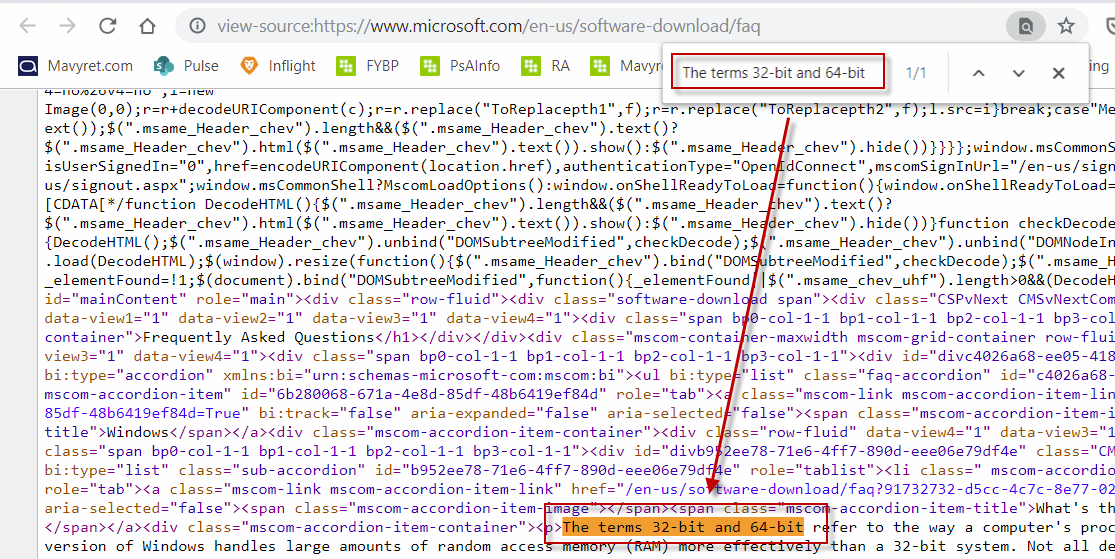

- Then, search for this in the raw HTML by viewing the source of the page.

- Given I can find the text in the raw HTML, I know it can be parsed and indexed by Google and other search engines without having to click the expand button. If I could not find it in the code, this could mean the text is loaded via JavaScript, which could cause issues and would be something I’d want to note.
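The manual search described in Check 4 can be partly scripted when you have many snippets to verify. The following is a minimal sketch, assuming you have already saved the page source (the raw HTML) as a string; `snippet_in_raw_html` is a hypothetical helper name. It ignores text inside script and style tags and compares text case-insensitively with whitespace collapsed.

```python
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text nodes, skipping the contents of script and style tags."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so formatting differences do not matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def snippet_in_raw_html(raw_html: str, snippet: str) -> bool:
    """True when the snippet appears in the text of the raw HTML."""
    parser = TextExtractor()
    parser.feed(raw_html)
    parser.close()
    return normalize(snippet) in normalize(" ".join(parser.chunks))
```

If this returns False for text you can see after expanding a tab or accordion, the text is probably not in the raw HTML and is worth noting. It is still a good idea to confirm by viewing the source manually.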

Additional Resources:

Internal Linking

Overview:

Internal links are those from one page on your site to another page on your site.

Code Sample:

Importance:

- Help users navigate your site.

- Help search engines crawl your site.

- Help search engines understand site architecture and page hierarchy.

- Help you rank higher by passing ranking power through to different pages on your site.

Checks to make:

See instructions below for how to conduct each check

- Are there orphan pages?

- Do any of your internal links have the nofollow attribute?

- Are there opportunities to improve the anchor text for your internal links?

- Are you using JavaScript (JS) to link to important pages on your site?

- Do pages on your site with the most ranking power have internal links to other pages?

- Do you have pages with very low internal link counts?

Instructions for the checks above:

Check 1: Use Screaming Frog to identify orphan pages by following the guide here.

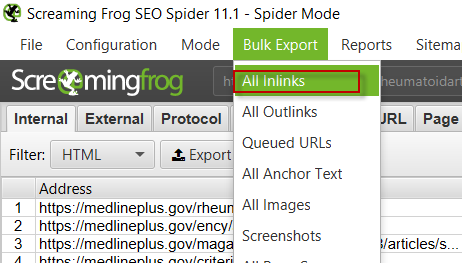

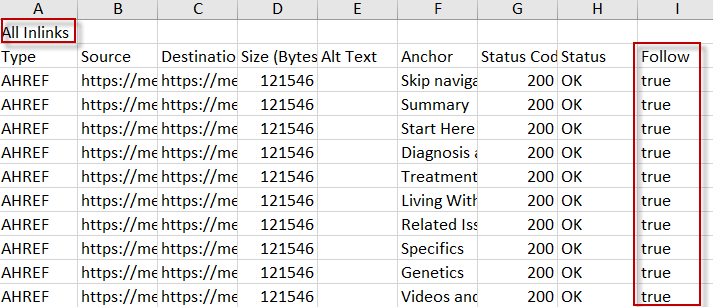

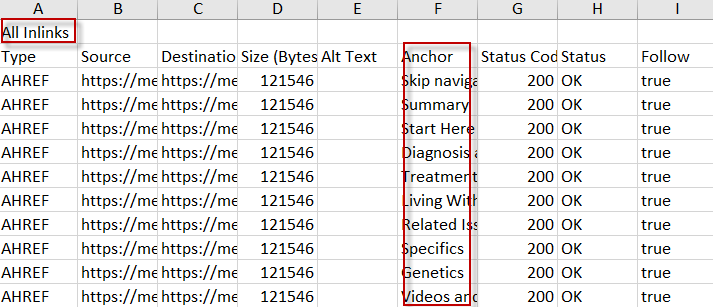

Checks 2-4:

- Crawl your site and Bulk Export All Inlinks using the screenshots below as a reference.

- Do any of your links have the nofollow attribute? You can check this by looking at the “Follow” column in the “All Inlinks” report you downloaded:

- Are any of your links missing descriptive anchor text? You can check this by looking at the “Anchor” column in the “All Inlinks” report you downloaded:

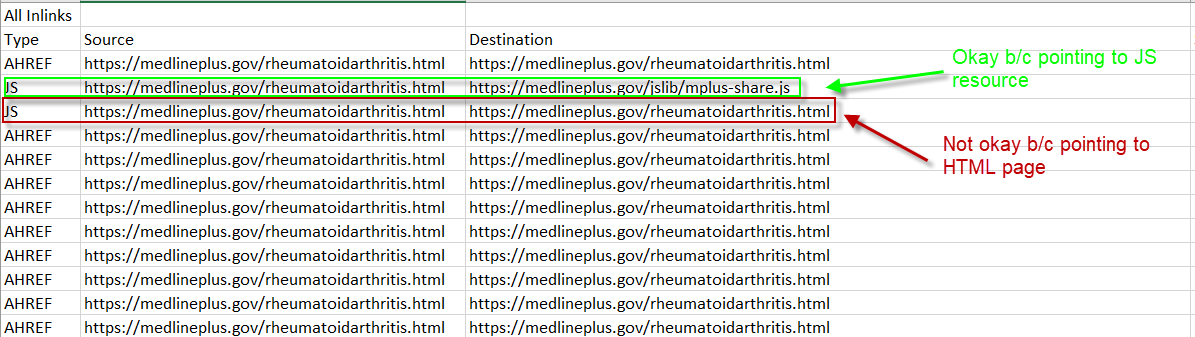

- Are you using JavaScript (JS) to link to important pages on your site? You can check this by looking at the “Type” column in the “All Inlinks” report you downloaded. Filter the “Type” column for JS, and then see if the Destination URL points at any important pages. Note that it is probably okay if you see JS links pointing to non-html pages. Below is an example of good and bad JS links.
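For large exports, Checks 2-4 can be filtered with a short script instead of a spreadsheet. This is a sketch under stated assumptions: it assumes the “All Inlinks” export is a CSV with columns named Type, Source, Destination, Anchor, and Follow, that nofollow links show "false" in the Follow column, and that ordinary links and JavaScript links are labeled "Hyperlink" and "JS" or "JavaScript" in the Type column. Check your own export and adjust these names if they differ.

```python
import csv
import io
from collections import Counter


def cell(row: dict, name: str) -> str:
    """Read a column as a trimmed string, tolerating missing values."""
    return (row.get(name) or "").strip()


def audit_inlinks(csv_text: str) -> dict:
    """Group links from an inlinks export by the issues in Checks 2-4."""
    findings = {"nofollow": [], "missing_anchor": [], "javascript": []}
    inlink_counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        link = (cell(row, "Source"), cell(row, "Destination"))
        link_type = cell(row, "Type").lower()
        inlink_counts[link[1]] += 1
        # Check 2: links marked nofollow (assumed to be "false" in the Follow column).
        if cell(row, "Follow").lower() == "false":
            findings["nofollow"].append(link)
        # Check 3: ordinary hyperlinks with no anchor text at all.
        if link_type == "hyperlink" and not cell(row, "Anchor"):
            findings["missing_anchor"].append(link)
        # Check 4: links created with JavaScript (assumed Type labels).
        if link_type in ("js", "javascript"):
            findings["javascript"].append(link)
    # Pages with very few inlinks stand out in these counts.
    findings["inlink_counts"] = dict(inlink_counts)
    return findings
```

The `inlink_counts` entry only covers URLs that receive at least one link, so orphan pages will not appear in it. Use the orphan page report from Check 1 for those.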

Check 5:

Do pages on your site with the most ranking power have internal links to other pages? Use a proxy for ranking power like Moz’s Page Authority and then ensure these pages have links out to other important pages.

Additional resources:

Website Speed Best Practices

Overview:

Website speed determines how fast users and search engines can access, understand, and interact with your content.

Importance:

- Organic search visibility

- Directly impacts rankings as Google wants searchers to have fast access to the pages it displays in search results.

- Indirectly impacts rankings as a slower site reduces the chance of other websites linking back to your website.

- Website engagement

- A faster website reduces the chance someone will leave before engaging with your site (e.g. signing up for your email stream) due to a poor website experience.

- User experience

- Helps ensure users have a good experience on your website.

Checks to make:

See instructions below for how to conduct each check

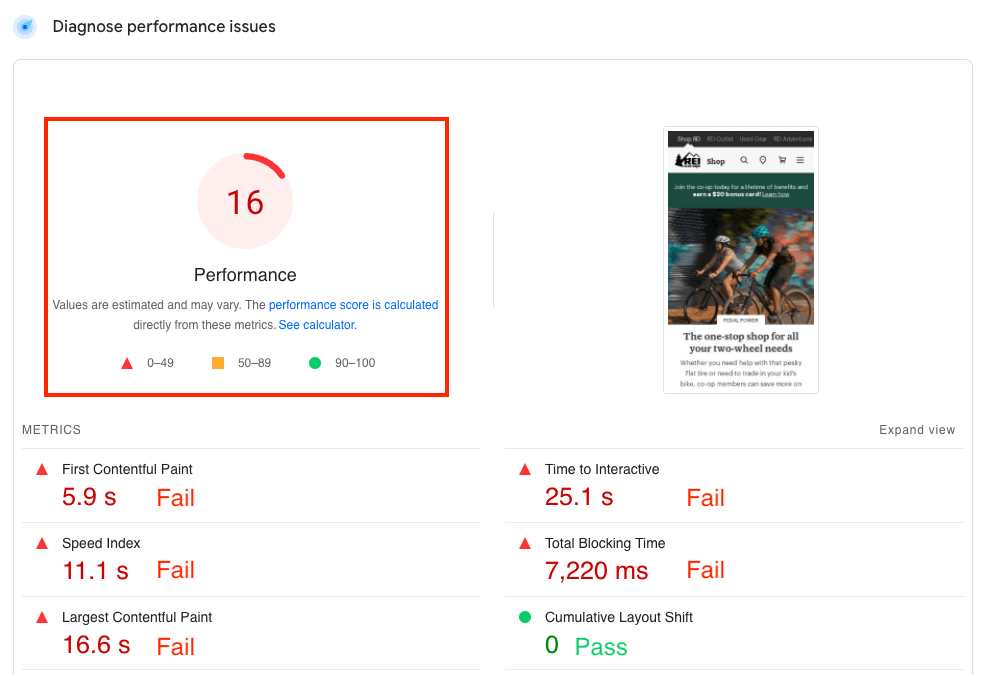

- Did Core Web Vitals show a passing score? If Core Web Vitals information is not available, is the Page Performance score 90 or higher?

- Does your site use HTTP/2 or HTTP/3?
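When you record results for many pages, the pass/fail rule in the first check can be written down as a small function so it is applied consistently. This is only a sketch of the rule as stated in this audit; the function name and the "no data" label are made up for illustration.

```python
from typing import Optional


def grade_page_speed(core_web_vitals_passed: Optional[bool],
                     performance_score: Optional[float],
                     minimum_score: float = 90.0) -> str:
    """Prefer the Core Web Vitals result; fall back to the performance score."""
    if core_web_vitals_passed is not None:
        return "pass" if core_web_vitals_passed else "fail"
    if performance_score is None:
        # Neither real-world nor simulated data was recorded for this page.
        return "no data"
    return "pass" if performance_score >= minimum_score else "fail"
```

Pass `None` for `core_web_vitals_passed` when the report shows no Core Web Vitals data for the page.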

Instructions for the checks above:

Evaluating page speed can be pretty technical and deserves its own audit. Fortunately, tools like Google PageSpeed Insights ease the burden of this auditing process. While these tools will not catch everything, they are a good start to a comprehensive page speed audit and will suffice for this portion of the technical audit.

Check 1:

- Use Google PageSpeed Insights to generate a page speed report. It is important to note the following:

- This tool evaluates on a page level versus a site level. For smaller websites, this shouldn’t be a problem. For larger websites, you may want to use a tool like Screaming Frog that allows you to pull the Google Page Speed report over a very large number of pages per the instructions here.

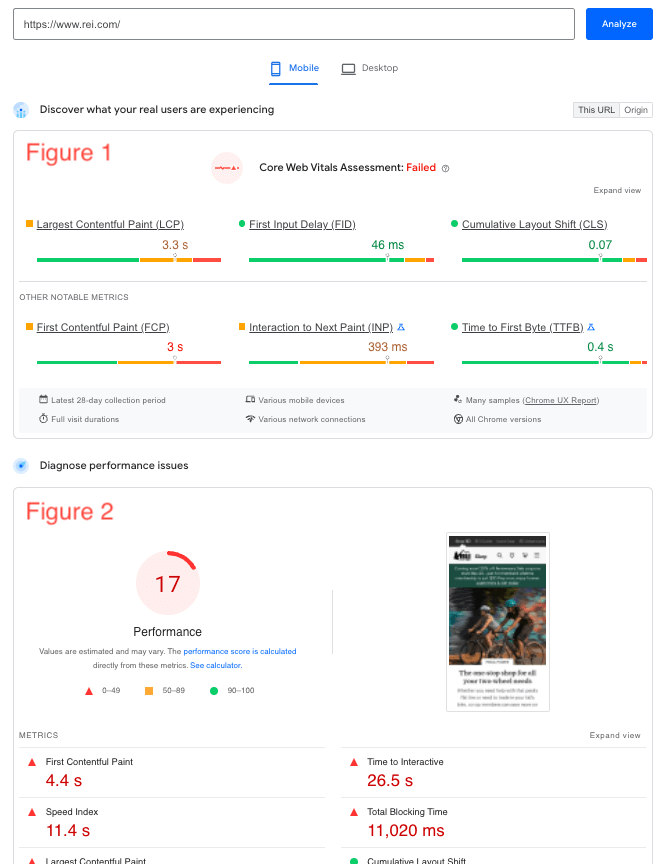

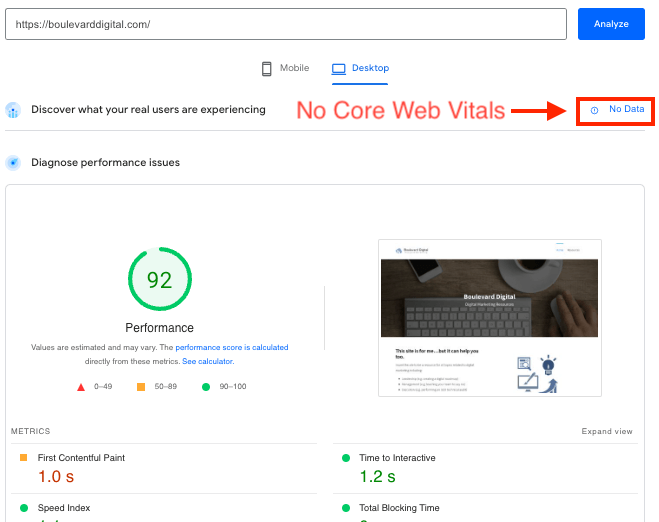

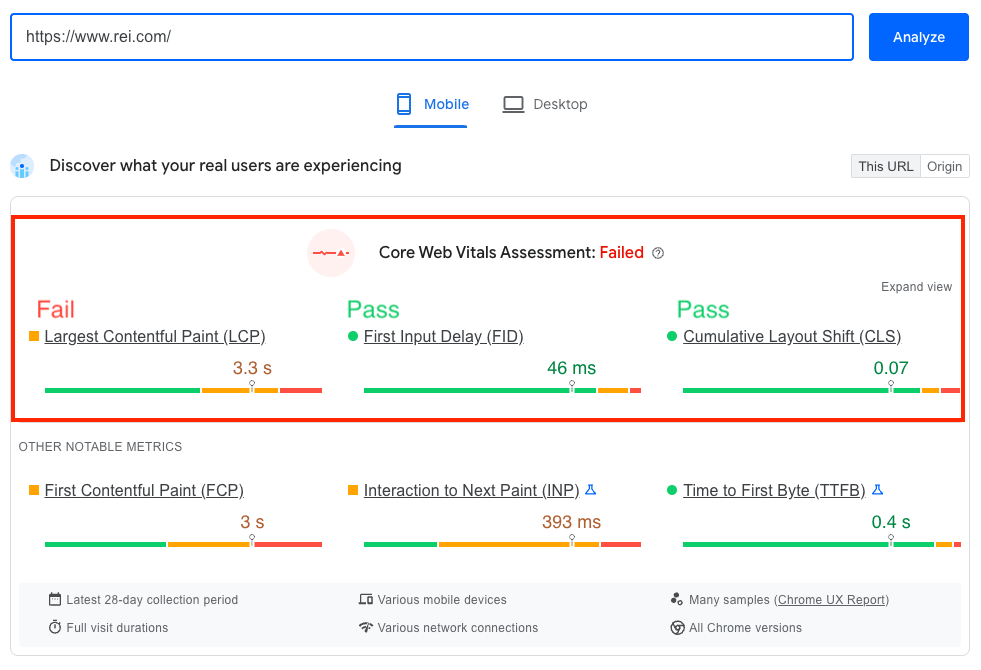

- This report will be broken into two parts. The first part is the Core Web Vitals score and associated metrics (figure 1). This is based on real-world data measured across a variety of devices using the Chrome browser. The second part is the Page Performance score and associated metrics (figure 2). This is based on simulated data. The difference between the simulated data that populates the Page Performance section and the real-world data that populates the Core Web Vitals section can be found here. While the Page Performance score is helpful, Google puts more weight on the Core Web Vitals score when determining rankings.

- Core Web Vitals information may not be available for your site at all, or only for a certain number of pages. This is common when your website does not get enough traffic for Core Web Vitals to make an assessment. In this case, you’ll need to rely on the Page Performance metrics.

- Once the report is generated, note whether the page failed Core Web Vitals. If so, note what specific metrics are failing.

- If Core Web Vital metrics are not available, note the performance score. If the overall score is less than 90%, note which specific metrics score less than 90%.

- Check 2: Does your site use HTTP/2 or HTTP/3?

- HTTP/2 allows for faster page speed loading times over HTTP/1.1 due to the factors described here. If the website you are evaluating is not using HTTP/2, this should be an easy update for your developer and a quick win for SEO and user experience. The easiest way to check if HTTP/2 is implemented on the website you’re evaluating is to install the chrome extension here.

- HTTP/3 is the next iteration of HTTP. As of May 2022, this can be somewhat challenging for developers to implement as it is still relatively new. However, if your developer is willing to implement it, you can expect faster site speeds for website visitors. The easiest way to check if HTTP/3 is implemented is to install the chrome extension here.
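As an alternative to the Chrome extensions in Check 2, the protocol can be checked from a script. This is a different technique from the one described above, offered as a rough sketch: `negotiated_protocol` asks the server which protocol it selects during the TLS handshake (ALPN), where "h2" means HTTP/2. HTTP/3 runs over QUIC rather than TCP, so it cannot be detected this way; `advertises_http3` instead looks for an "h3" entry in the value of the Alt-Svc response header, which servers commonly use to advertise HTTP/3. Both function names are hypothetical.

```python
import socket
import ssl
from typing import Optional


def negotiated_protocol(host: str, port: int = 443, timeout: float = 10.0) -> Optional[str]:
    """Return the ALPN protocol the server selects, e.g. 'h2' or 'http/1.1'."""
    context = ssl.create_default_context()
    # Offer HTTP/2 first; a server without HTTP/2 falls back to HTTP/1.1 or selects nothing.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()


def advertises_http3(alt_svc_header: Optional[str]) -> bool:
    """True when an Alt-Svc header value lists an h3 (HTTP/3) endpoint."""
    if not alt_svc_header:
        return False
    for entry in alt_svc_header.split(","):
        protocol = entry.strip().split("=", 1)[0].strip().lower()
        if protocol == "h3" or protocol.startswith("h3-"):
            return True
    return False
```

For example, `negotiated_protocol("example.com") == "h2"` would indicate HTTP/2 support on that host. You can copy the Alt-Svc header value from the Network tab in Chrome Developer Tools and pass it to `advertises_http3`.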

Additional resources:

Image Best Practices

Overview:

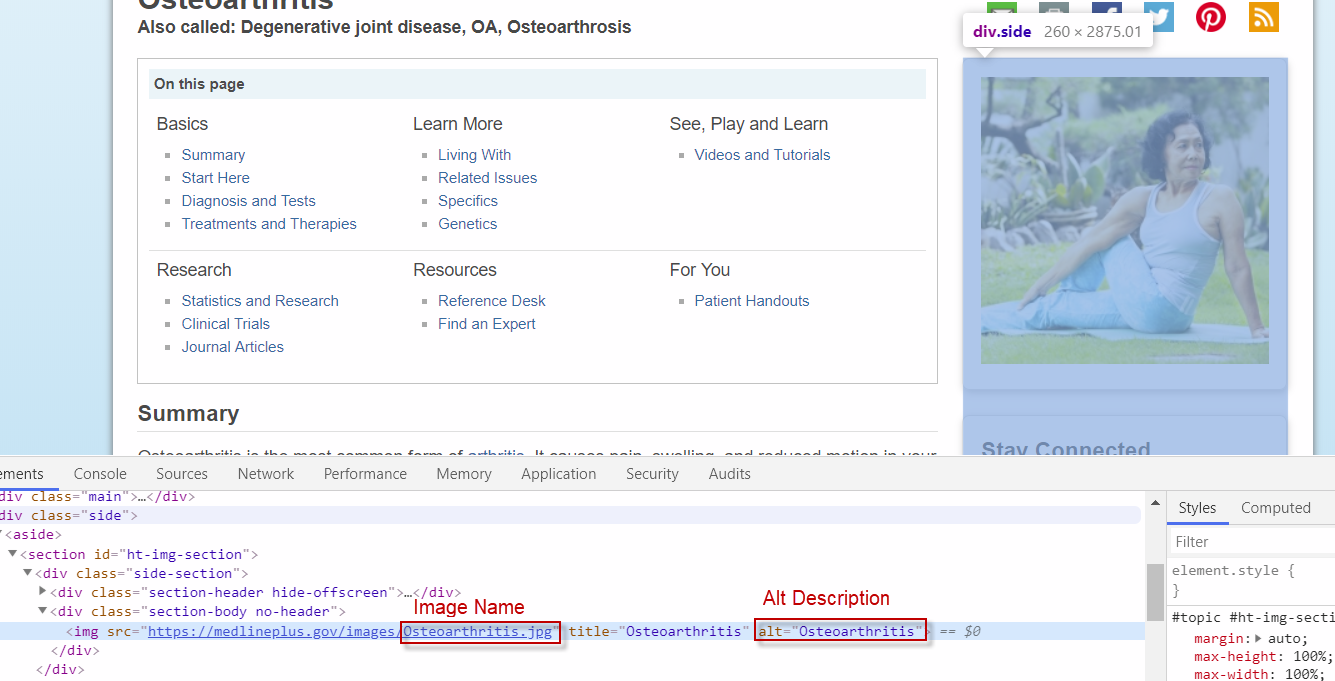

Image best practices include adding alt text to images, having descriptive image names, and ensuring the image does not cause page load time issues. How the image impacts page load time is already covered in the website speed section of this audit.

Code Sample:

Importance:

- Accessibility: Visually impaired users will use screen readers which are tools that read out loud the text on a page. These screen readers will read the image alt text to describe the images to the visually impaired user.

- Search engines: Alt text and image names are used by search engines to better understand the content on a page (source). While search engines are getting better at understanding images, this text can still help by putting the image in the context of the page. Again, the more Google understands the value of your content, the better you’ll rank.

Checks to make:

See instructions below for how to conduct each check

- Is there missing alt text for any images?

- Do all existing alt tags properly describe the image?

- Are the alt tags short enough?

- Do any alt descriptions include the text “image of” or “picture of”?

- Are there any image names that do not accurately describe the image?

Instructions for the checks above:

- All checks: Use the “Images” tab of Screaming Frog described here.

- For large sites, you’ll want to prioritize which images to evaluate, as reviewing every image is difficult to do at scale.
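The mechanical parts of these checks (missing alt text, overly long alt text, and “image of” or “picture of” phrasing) can be sketched in Python for a single page. This is an illustration, not a replacement for the Screaming Frog report. The 125-character default is an assumed placeholder, since this audit does not define how short is “short enough”, and whether the alt text properly describes the image still needs a human review.

```python
from html.parser import HTMLParser


class ImageCollector(HTMLParser):
    """Collect (src, alt) for every img tag; alt is None when the attribute is absent."""

    def __init__(self) -> None:
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            self.images.append((attributes.get("src") or "", attributes.get("alt")))


def audit_alt_text(html: str, max_length: int = 125) -> list:
    """Return (src, issue) pairs for the alt text problems found in html."""
    collector = ImageCollector()
    collector.feed(html)
    issues = []
    for src, alt in collector.images:
        text = (alt or "").strip()
        if not text:
            # Note: an empty alt can be intentional for purely decorative images.
            issues.append((src, "missing alt text"))
            continue
        if len(text) > max_length:
            issues.append((src, "alt text too long"))
        if "image of" in text.lower() or "picture of" in text.lower():
            issues.append((src, "contains 'image of' or 'picture of'"))
    return issues
```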

Additional resources:

Structured Data

Overview:

Structured data is additional information provided to search engines beyond what is in the HTML markup. It allows website owners to provide more information about pages, products, organizations, and many other entities presented to the user. This information can be used by the search engine to better understand the content and enhance how it appears in search results.

Code Sample (JSON-LD):

Importance:

While there is no generic ranking boost for the presence of structured data, it can impact rankings if it helps search engines better understand a page’s relevance to a query. More importantly, structured data can improve a website’s appearance in search results, which can directly impact traffic. For example, see the two search results below. The first one has structured data allowing it to add the rating and publication date. This may give it a higher CTR than the other example that does not have these features.

Checks to make:

A complete review of a website’s structured data status and potential is not the purpose of this audit. Instead, we check for the following:

- Do any pages have structured data that is not validating?

- Is there content that Google can optimize in search results based on the structured data features listed here?

Instructions for the checks above:

Check 1: Use Screaming Frog’s structured data testing tool described here.

Check 2: Review the structured data features listed here and identify any applicable to the website you are auditing.
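Before running a full validation, a quick syntax check can catch JSON-LD blocks that will never validate because they are not valid JSON. The sketch below only confirms that each `application/ld+json` script parses and that each item declares an `@type` (or an `@graph`). It does not check Google’s required properties, so it complements the validation in Check 1 rather than replacing it. The names are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks = []
        self._buffer = None

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append("".join(self._buffer))
            self._buffer = None


def check_json_ld(html: str) -> list:
    """Return (block_index, problem) pairs; an empty list means no syntax problems."""
    collector = JsonLdCollector()
    collector.feed(html)
    problems = []
    for index, block in enumerate(collector.blocks):
        try:
            data = json.loads(block)
        except json.JSONDecodeError as error:
            problems.append((index, f"invalid JSON: {error.msg}"))
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict):
                problems.append((index, "item is not a JSON object"))
            elif "@graph" not in item and "@type" not in item:
                problems.append((index, "missing @type"))
    return problems
```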

Additional resources:

Heading Tags (H1-H6)

Overview:

Heading tags differentiate headings from body copy. They contribute to the hierarchical structure of your content which helps both users and search engines better understand and consume your content.

Code Sample:

Importance:

- Help users navigate your site, especially when engaged in scanning, a common way of consuming content on the web. Most factors that help users indirectly benefit rankings.

- Help search engines understand the hierarchy of your content.

- Can increase your chances of appearing in a quick answer box.

Checks to make:

See instructions below for how to conduct each check

- Does your site have an H1 tag for each page? If no, fail and document the URLs.

- Does your site have multiple occurrences of an H1 tag on the same page? If yes, fail and document the URLs.

- Does your site have the same H1 tag on multiple pages? If yes, fail and document the URLs.

- Do your H1s represent the main idea of the page? If no, fail and document the URLs.

- Do your pages follow a clear structure of H1s followed by H2s and then H3s? If no, fail and document the URLs.

Instructions for the checks above:

- Checks 1-3: Use the “H1” tab of Screaming Frog described here.

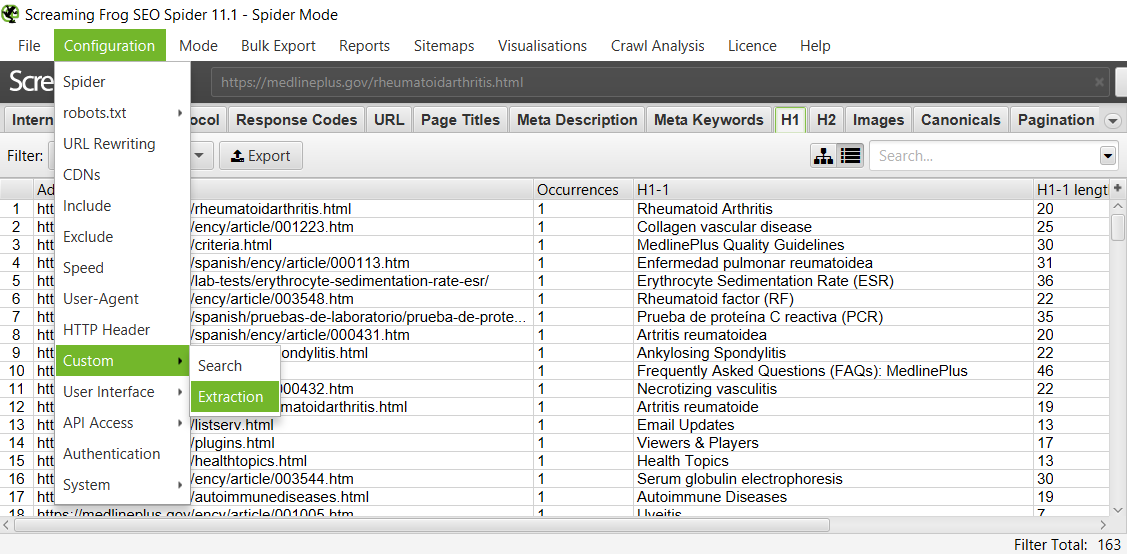

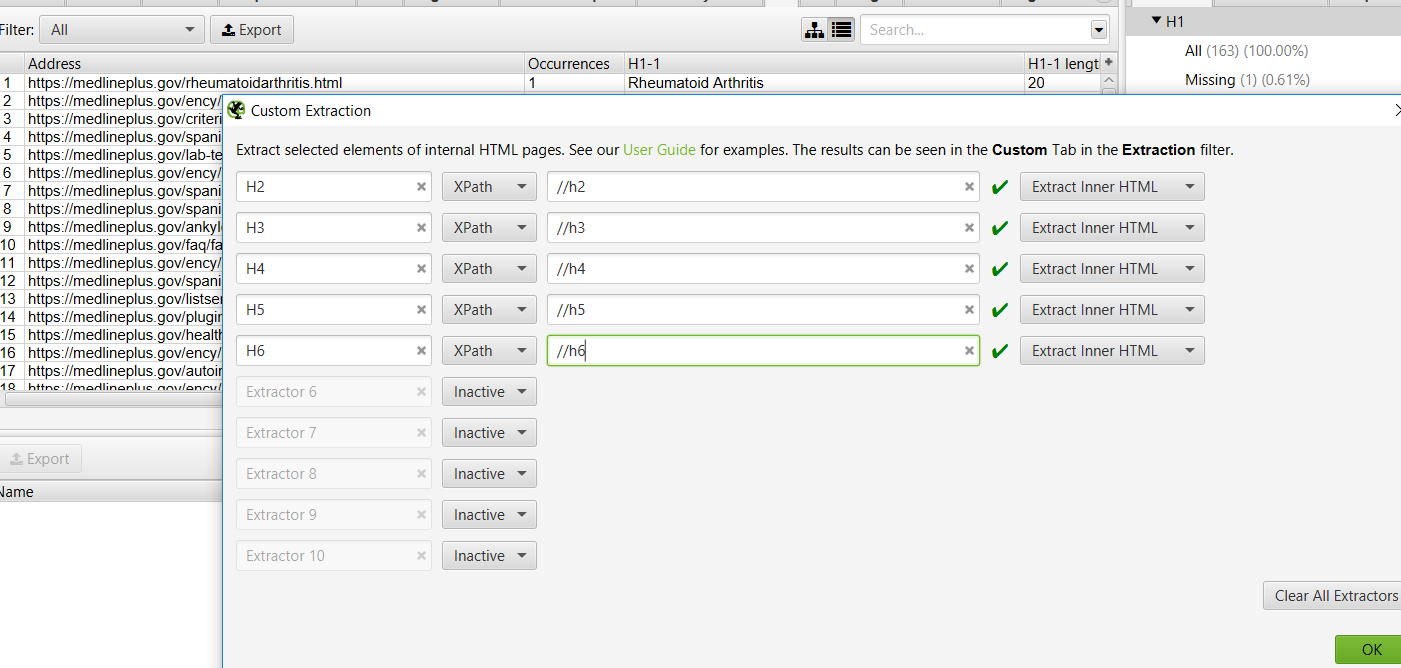

- Checks 4-5: Check the H1-H6 tags by setting up a custom extraction in Screaming Frog by going to Configuration > Custom > Extraction

- Once there, enter the following values:

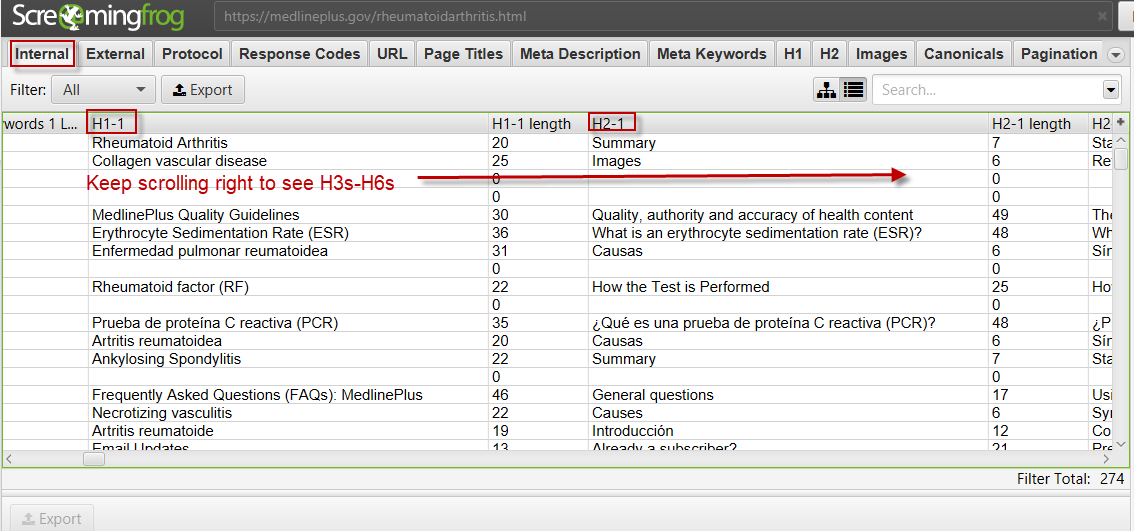

- Click OK then crawl the site. This will produce a report that will show you all H2-H6s on your site when you are on the “internal” tab.

- For large sites, you may need to limit your evaluation to a percentage of pages selected based on a metric such as organic sessions.
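For a single page, the structural parts of these checks (a missing H1, multiple H1s, and skipped heading levels) can be sketched in Python. This is an illustration with hypothetical names, not the Screaming Frog extraction described above. Whether an H1 represents the main idea of the page still needs a human read, and finding the same H1 on multiple pages requires comparing results across pages.

```python
from html.parser import HTMLParser

HEADING_TAGS = ("h1", "h2", "h3", "h4", "h5", "h6")


class HeadingCollector(HTMLParser):
    """Collect (level, text) for every heading in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings = []
        self._level = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            self.headings.append((self._level, " ".join("".join(self._text).split())))
            self._level = None


def audit_headings(html: str) -> list:
    """Return a list of heading structure issues found in html."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = [level for level, _ in collector.headings]
    issues = []
    h1_count = levels.count(1)
    if h1_count == 0:
        issues.append("no H1")
    elif h1_count > 1:
        issues.append("multiple H1s")
    if levels and levels[0] != 1:
        issues.append("first heading is not an H1")
    # Going deeper by more than one level at a time skips a level.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"H{previous} followed by H{current} (skipped level)")
    return issues
```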

Additional resources:

URL Best Practices

Overview:

URLs are used by users and search engines to access various types of content on the internet. This content can include web pages, images, PDFs, etc. A great introduction to URLs and their structure can be found here.

Importance:

- An optimized URL helps the user experience in the following ways:

- Along with title and description, optimized links help users understand the destination of a link within search results.

- Help users understand where they are on a website.

- Helps users share content. Would you rather send your friend the link jensshoes.com/1245_12&12?id=12&sessionid=23 or jensshoes.com/slippers/red-slippers? One encourages sharing. The other does not.

- An optimized URL helps search engines better understand your content. If you have good content, this will benefit you.

Checks to make:

See instructions below for how to conduct each check

- Are there any URLs that are hard to understand as far as where they would take a user?

- Are all URLs using hyphens to separate words?

- Are there any URLs using underscores or spaces to separate words?

- Are there any URLs that trigger one of your global redirect rules?

- Are there any URLs using filler words like “a,” “and,” or “the?”

- Are there any URLs with session IDs?

- Are there any URLs with extensions like .html?

- Are there any URLs that appear to be unnecessarily long?

- Are there any URLs with obvious keyword stuffing?

Instructions for the checks above:

Crawl the site using Screaming Frog and then navigate to the URL tab described here to complete these checks:

- Check 1: Are there any URLs that are hard to understand as far as where they would take a user?

- Check 2: Are all URLs using hyphens to separate words? For example, search engines and users prefer example.com/green-dress over example.com/greendress.

- Check 3: Are there any URLs using underscores or spaces to separate words? For example, example.com/green_dress or example.com/green dress.

- Check 4: Are there any URLs that trigger one of your global redirect rules? For example, if you have a redirect rule to enforce upper to lower case letters, and you have the URL example.com/Green-Shoes somewhere on your site, this URL will always be redirected. This impacts user experience by requiring extra time to redirect and load the page.

- Check 5: Are there any URLs using filler words like “a,” “and,” or “the?” Usually, these words are not needed and add to the length of a URL without providing any value.

- Check 6: Are there any URLs with session IDs? Session IDs look something like this: example.com/page?sid=12ak5r where there is a question mark followed by a key-value pair like “sid=X.”

- Check 7: Are there any URLs with extensions like .html?

- Check 8: Are there any URLs that appear to be unnecessarily long?

- Check 9: Are there any URLs with obvious keyword stuffing? For example, a URL that appears like this: products/wii/best-prices/lowest-price/cheap-wii-products/mariokart-best-price/lowest-price-mariokart/mariokart-with-wii-wheel-cheapest-price.html
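Several of the checks above are mechanical enough to run over an exported URL list with a script. The sketch below is one possible approach, with assumptions labeled: the session parameter names, the file extensions, and the 100-character length limit are illustrative choices rather than values from this audit, and the uppercase check assumes the site has a lowercase redirect rule like the one in the Check 4 example. Checks 1 and 9 (clarity and keyword stuffing) still need human judgment.

```python
from urllib.parse import parse_qs, urlsplit

FILLER_WORDS = {"a", "and", "the"}
# Assumed parameter names; adjust to whatever your platform uses.
SESSION_KEYS = {"sid", "sessionid", "session_id", "phpsessid", "jsessionid"}


def audit_url(url: str, max_length: int = 100) -> list:
    """Return the list of URL issues found, in the order of the checks above."""
    parts = urlsplit(url)
    path = parts.path
    issues = []
    if "_" in path:
        issues.append("underscore")
    if " " in path or "%20" in path:
        issues.append("space")
    if path != path.lower():
        # Would trigger a lowercase redirect rule, if the site has one.
        issues.append("uppercase letters")
    words = [word for segment in path.lower().split("/") for word in segment.split("-") if word]
    if FILLER_WORDS.intersection(words):
        issues.append("filler word")
    if SESSION_KEYS.intersection(key.lower() for key in parse_qs(parts.query)):
        issues.append("session ID")
    if path.lower().endswith((".html", ".htm", ".php")):
        issues.append("file extension")
    if len(url) > max_length:
        issues.append("long URL")
    return issues
```

For example, `audit_url("https://example.com/slippers/red-slippers")` returns an empty list, while a URL with an underscore, capital letters, a `sid` parameter, and an `.html` extension is flagged for each of those.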

Additional resources:

Favicon

Overview:

Favicons appear in search results, browser tabs, search history, and your browser’s bookmarks.

Importance:

- More organic traffic

- Given their prominence in Google search results, favicons can directly impact clicks to your site from search results.

- Better user experience

- Helps a searcher navigate their crowded search tabs or search history.

Checks to make:

See instructions below for how to conduct each check

- Does your site have a favicon consistent with your brand (e.g. logo), unique from your organic search competitors, and relevant to the searches for which it will be displayed?

- Does your favicon follow the best practices here including all sizes necessary for different mobile devices?

Instructions for the checks above:

Check 1: Check to ensure you have a favicon that is consistent with your brand, unique, and relevant in the following places:

- Google mobile search results

- Browser tabs

- Search history

- Bookmarks

Check 2: Ensure your favicon matches the best practices here.

Additional resources:

Now go make the world a better place!

A technical SEO audit identifies issues affecting a website’s ability to attract customers. Once fixed, the website will be able to attract more customers and delight them with its services or products. In this small way, a technical SEO audit contributes to a better world!